The hiring process has evolved, and artificial intelligence (AI) and machine learning (ML) are leading that effort. But how does this technology stand up to the test of implicit bias?

Once upon a time, artificial intelligence and machine learning were two ambiguous terms. Now, these data-driven processes automate tasks across industries and in households around the world. AI is used to build new platforms and cultivate diverse datasets, and it is becoming more prevalent in talent acquisition. But is human bias, whether conscious or subconscious, training these AI hiring tools to discriminate against hopeful applicants according to age, race, and gender?

AI and the Hiring Process

The adoption of artificial intelligence by businesses increased by 270 percent in just four years, and the AI market is projected to reach $266.92 billion by 2027. Interestingly enough, the human resources field is contributing to this growth. With the future of work trending toward digital and remote, talent acquisition divisions are overloaded with resumes and applications. Fifty-two percent of talent acquisition leaders say the most challenging part of the recruitment process is identifying the right candidates from a large applicant pool, so it's clear they could use some help.

That's where AI and machine learning software come in. AI in the hiring process is helping Talent Acquisition Managers (TAs) automate an array of time-consuming tasks, from mundane administrative work to creating and improving standardized job-matching processes and shortening the time it takes to screen, hire, and onboard new candidates.

What AI Hiring Software Can't Fix

These days, quite a few AI hiring software applications assist with the different hiring functions: recruiting, onboarding, retention, and everything in between. To combat bias, these tools must have diverse applications and hiring decisions to learn from. Training data, the initial set of data a machine learning model learns from, can help these apps make informed decisions about which candidate is the best fit for an open role.

Kurt and Angela Edwards created Pyxai because they believed that soft skills and cultural traits are crucial metrics that companies should prioritize. With a standardized metric, recruiters and hiring managers can measure these traits fairly across candidates. Kurt Edwards insists this will result in more suitable hiring choices: "Hard skills can be learned, but soft skills are harder. That's what to look for and measure."

Creating a culture of inclusion should be a part of everyone's job, especially for HR professionals who want to retain diverse talent. That work cannot be left solely to the recruiting tools, however. For example, pictures of predominantly white teams can deter people of color from applying to a company's open roles. The use of universal jargon in job postings can unintentionally exclude certain genders, and the requirement for a specified number of years' experience can perpetuate ageism. When considering AI's impact on hiring, it's essential to understand the dynamics between words, images, and inclusivity.

Types of Artificial Intelligence Hiring Bias

Training data, machine learning, and natural language processing enable hiring tools to understand human patterns. But if the people whose hiring decisions make up that data have biases, then the data may already be tainted.

Kurt Edwards thinks this overlooked bias hurts the talent funnel. "There were people with good talent that just didn't make it into the funnel because there was a lot of unconscious bias in the recruiting industry." For example, the halo effect can cause interviewers to focus on a candidate's shining qualities despite detrimental shortcomings. Actual bias built into AI hiring software applications can take many forms.

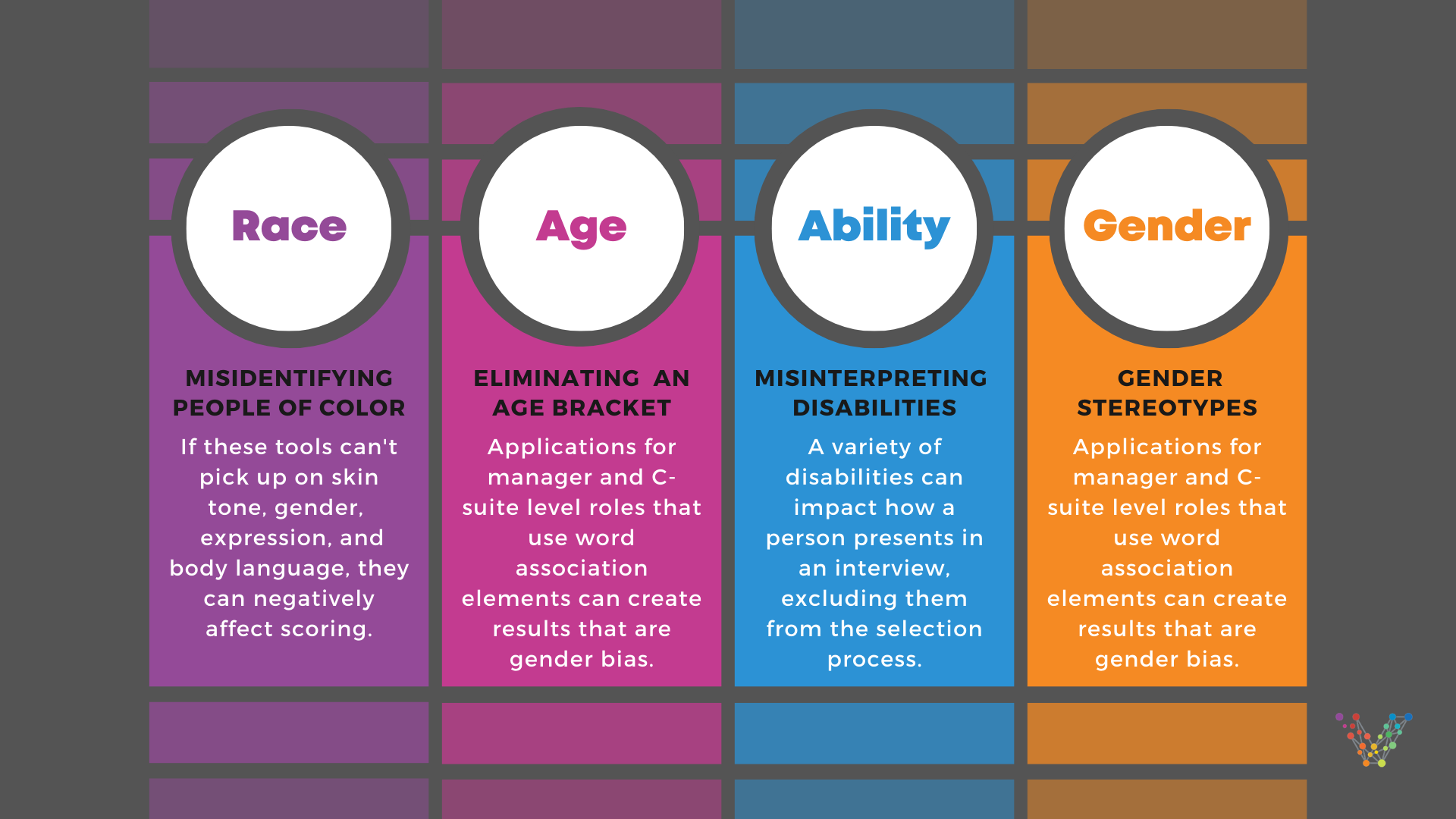

Misidentifying people of color

Hiring and interview tools sometimes use facial-recognition software to screen candidates for persona and fit. But if these AI hiring tools can't accurately read skin tone, gender, expression, and body language, candidates' scores can suffer.

Reinforcing gender stereotypes

Gender stereotypes in corporate America have been prevalent for centuries, mainly to the detriment of women. AI applications for manager and C-suite level roles that use word association elements can produce gender-biased results. This leads to occupational segregation, where women are stuck in underpaid, more junior roles.

Eliminating an entire age bracket

Although people don't usually put their birthdate on their resume, the number of years of work experience generally aligns with one's age. Programming AI hiring software to weigh this metric as a measure of skill can produce results that exclude entire age groups from even being considered.

Overlooking the nuances of disability

Enunciation, body language, and facial expressions are all potential characteristics that are scored by AI hiring software. A wide variety of disabilities can impact how a person presents during a phone or video interview, which could exclude them from the selection process.

Luckily, because of Joy Buolamwini, founder of the Algorithmic Justice League, there's a name for this algorithmic bias. It's called the "coded gaze," and the way to continually eliminate it is through the use of fair, equitable, and inclusive AI hiring practices.

Solving for Issues in AI Hiring

Angela Edwards of Pyxai says that there has been a paradigm shift in recruiting. The new workforce generation expects companies to have modern training models, better DEI efforts, and higher ethics in general. This shift may be what the HR industry needs to create more equitable opportunities for underrepresented people.

Buolamwini's call to action in her TEDx talk still rings true - companies need more inclusive coding. When coding is inclusive, it reflects who is doing the coding and the purpose of the code. With the help of engineers (whether in-house or third-party), HR professionals can be intentional about how they use the training data that goes into AI hiring applications. They can choose which details (like age, race, and gender) of a candidate's profile to consider or not consider when configuring the tools.
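As a rough illustration of what "choosing which details to consider" could look like in practice, here is a minimal Python sketch. The field names, the `PROTECTED_FIELDS` set, and the `redact_profile` helper are all hypothetical; they do not come from any particular hiring tool, and a real configuration would depend on the vendor's own settings.

```python
# Hypothetical set of candidate details the team has decided the tool
# should not consider when scoring.
PROTECTED_FIELDS = {"age", "race", "gender"}


def redact_profile(profile, excluded=PROTECTED_FIELDS):
    """Return a copy of the profile without the excluded fields."""
    return {key: value for key, value in profile.items() if key not in excluded}


# Illustrative candidate record (made-up values).
candidate = {
    "candidate_id": "c-001",
    "skills": ["SQL", "Python"],
    "years_experience": 25,
    "age": 52,
    "race": "undisclosed",
    "gender": "undisclosed",
}

features = redact_profile(candidate)
print(sorted(features))  # the fields the scoring step would still see
```

Note that dropping a field does not remove its proxies. In this sketch `years_experience` is still present, and as discussed earlier, years of experience generally aligns with age, so the choice of what to exclude deserves the same care as the choice of what to include.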

Another way to help solve potential AI hiring bias is to test workplace software every quarter. HR professionals can do this by using AI hiring software to rank and score the candidates and then analyze the results to see if the algorithm favored any demographics. If an over-representation of a particular race, age, or gender emerges, management and leadership should reevaluate the algorithms.
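A quarterly check like this can be sketched in a few lines of Python. The example below assumes the audit data is a simple list of (group, advanced-or-not) pairs, which is a made-up format, and it borrows the "four-fifths" guideline (flagging any group whose selection rate is below 80 percent of the highest group's rate) as one possible threshold. That threshold is an assumption added here for illustration, not something the tools or people quoted in this article prescribe.

```python
from collections import defaultdict


def selection_rates(results):
    """Return the share of candidates the tool advanced within each group."""
    totals = defaultdict(int)
    advanced = defaultdict(int)
    for group, was_advanced in results:
        totals[group] += 1
        if was_advanced:
            advanced[group] += 1
    return {group: advanced[group] / totals[group] for group in totals}


def flag_disparities(rates, threshold=0.8):
    """Return groups whose rate is below threshold times the highest rate.

    The 0.8 default follows the four-fifths guideline (an assumption here).
    """
    best = max(rates.values())
    if best == 0:
        return {}  # nobody advanced, so there is nothing to compare
    return {
        group: rate / best
        for group, rate in rates.items()
        if rate / best < threshold
    }


# Hypothetical audit sample: group label and whether the tool advanced them.
audit = (
    [("Group A", True)] * 6 + [("Group A", False)] * 4
    + [("Group B", True)] * 3 + [("Group B", False)] * 7
)

rates = selection_rates(audit)
flagged = flag_disparities(rates)
print(rates)
print(flagged)
```

In this made-up sample, Group B advances at half the rate of Group A, so it is flagged for review. A flag is a prompt for management and leadership to look closer at the algorithm, not proof of bias on its own.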

Action Items

Implicit bias training should include actionable solutions and directives that won't fall by the wayside in the busyness of work. Shirin Nikaein is the creator of Upful.ai, software that coaches employees to give unbiased performance reviews. She advises that the best approach to bias training is to be as non-accusatory as possible. When people are on the defensive, the training won't resonate as much. Managers should choose training courses with a gentle yet effective approach.

Remote and digital work has embedded AI and machine learning further into our lives, marking a unique opportunity in the hiring space. A simple line of code can significantly impact employment and has severe implications for both the candidate and the businesses that hire or pass on them. Machine learning and the human touch must both align with the mission of equity.
