How 5 Simple Frameworks Turn AI Into Your Competitive Edge

From executive summaries to brainstorming new ideas, prompt frameworks can make AI tools like ChatGPT more impactful. Explore 5 prompt engineering frameworks that help leaders get consistent results.

Prompt engineering frameworks turn vague AI prompts into clear, repeatable structures that define context, role, task, and output, so leaders can get consistent, high‑quality results from AI tools like ChatGPT.

Prompt frameworks such as RISE, RTF, BAB, CARE, and CRIT give executives practical ways to use AI for decision‑making, executive summaries, board updates, and strategic planning.

By using structured AI prompts, business leaders can turn ChatGPT into a reliable thought partner that drives smarter decisions, sharper communication, and measurable productivity gains.

As Chief Strategy Officer of a regional manufacturing company, David wanted to use AI to optimize the company's inventory. So he opened ChatGPT and asked: "Help me use AI to optimize our inventory positioning and safety stock levels." The output was a wall of generic advice. Slop. He tried again, this time more specifically: "Based on industry best practices, give me three AI-based methods for optimizing our safety stock levels." The answer seemed clear and concise, so David pasted it into an internal memo and sent it to his team.

His mistake surfaced a few hours later, when several team members wrote back asking for clarification and pointing out inconsistencies in the memo, a few bad sources, and broken hyperlinks. Clarifying and correcting the memo took the rest of David’s day, and by then he realized he’d have been better off thinking through his strategy himself. Instead, he was now behind on the rest of his workload.

Across town, Jennifer—a CSO at a competing manufacturing firm—had the same goal. But she'd already sent her preliminary strategy deck and was reviewing feedback with her executive team.

The difference wasn't that Jennifer knew more about AI or had better access to technology. She'd simply figured out what David was learning the hard way: using AI skillfully requires context. She used a framework to supply that context, spelling out the steps in the process and the outcome she expected from her prompt.

Frameworks aren’t a new concept in business. From the balanced scorecard to OKRs, they have helped leaders tackle complex problems, align teams, and make better decisions for decades. They are effective because they bring order, consistency, and a shared language to how organizations operate.

Prompt engineering frameworks apply that same principle to AI, turning large language models (LLMs) and AI tools like ChatGPT from experiments into reliable business partners. And the payoff can be substantial: research shows that AI tools like ChatGPT can boost workforce productivity by an average of 14%, with some companies reporting gains of up to 400%. Set against that is the invisible tax of “workslop” (the kind of output David produced), which Harvard Business Review estimates can cost organizations millions of dollars every year.

In this article, we’ll explore five practical prompt engineering frameworks: general approaches that work across models and industries. These prompt frameworks are tools that knowledge workers can use right away to get clearer, more consistent results from AI tools.

Do AI Prompts Depend on Model Quality?

LLMs are the engines behind today's most advanced AI tools, and the model you choose directly affects how well your prompts perform. More advanced models can interpret context better, follow complex multi-step instructions (especially helpful in domains like math and coding), and deliver higher-quality, more reliable results.

The three leading commercial frontier models—Google's Gemini, OpenAI's GPT-5, and Anthropic's Claude—each offer distinct capabilities.

- Gemini integrates seamlessly with Google's ecosystem, handles multimodal inputs (text, audio, video, images, and code), and can ground its responses in real-time Google Search results.

- Claude powers many popular AI coding applications and excels at long-form reasoning and safety-critical tasks. Its developer, Anthropic, markets Claude as an “enterprise‑grade” model with strong safety, long-context, and integration features that appeal to organizations.

- ChatGPT, powered by GPT-5, OpenAI's most advanced model, can automatically adapt to task complexity and shift into deeper reasoning modes when needed. It’s primarily positioned as a mass‑market consumer assistant, and in several areas OpenAI is now responding to moves from rivals such as Gemini and Claude.

But whether you're using ChatGPT, Claude, Gemini, or even an AI-powered app, the underlying structure of effective prompting remains consistent. Model selection alone doesn't guarantee results. The real differentiator lies in how you structure your requests.

The frameworks we’ll explore in this piece are model-agnostic by design. But what exactly are frameworks, and how do they work?

How Structured Prompting Works

Frameworks work like mental shortcuts that help professionals solve tough problems. When our brains are busy deciding what questions to ask, how to organize our requests, and what kind of answer we need, we start making mistakes before we even begin the real work. Cognitive Load Theory describes this limit: we can only hold so much information in working memory at once, and poor planning quickly uses up that capacity. Researchers who study decision-making call the broader challenge "bounded rationality": we make worse decisions when we're rushed or dealing with too much information at once.

Structured prompting lets people focus their mental energy on what really matters: the actual content and main idea of their important question.

Business leaders have used this idea in popular frameworks like McKinsey's 7-S Framework, Porter's Five Forces, and the BCG Growth-Share Matrix. These frameworks work well because the structure lives on paper or a screen, which frees mental energy for deeper thinking. When we apply the same idea to AI, the payoff is immediate: organized, structured prompts consistently outperform improvised requests, producing higher-quality results faster.

Without structure, many executives waste time going back and forth with AI, which leads some to conclude that AI tools don't actually work. Given clear, organized instructions, the same tools deliver far more useful results.

Let’s learn how the RISE framework integrates this structure into a repeatable process.

1. The RISE Prompt Framework (Role, Input, Steps, Expectation)

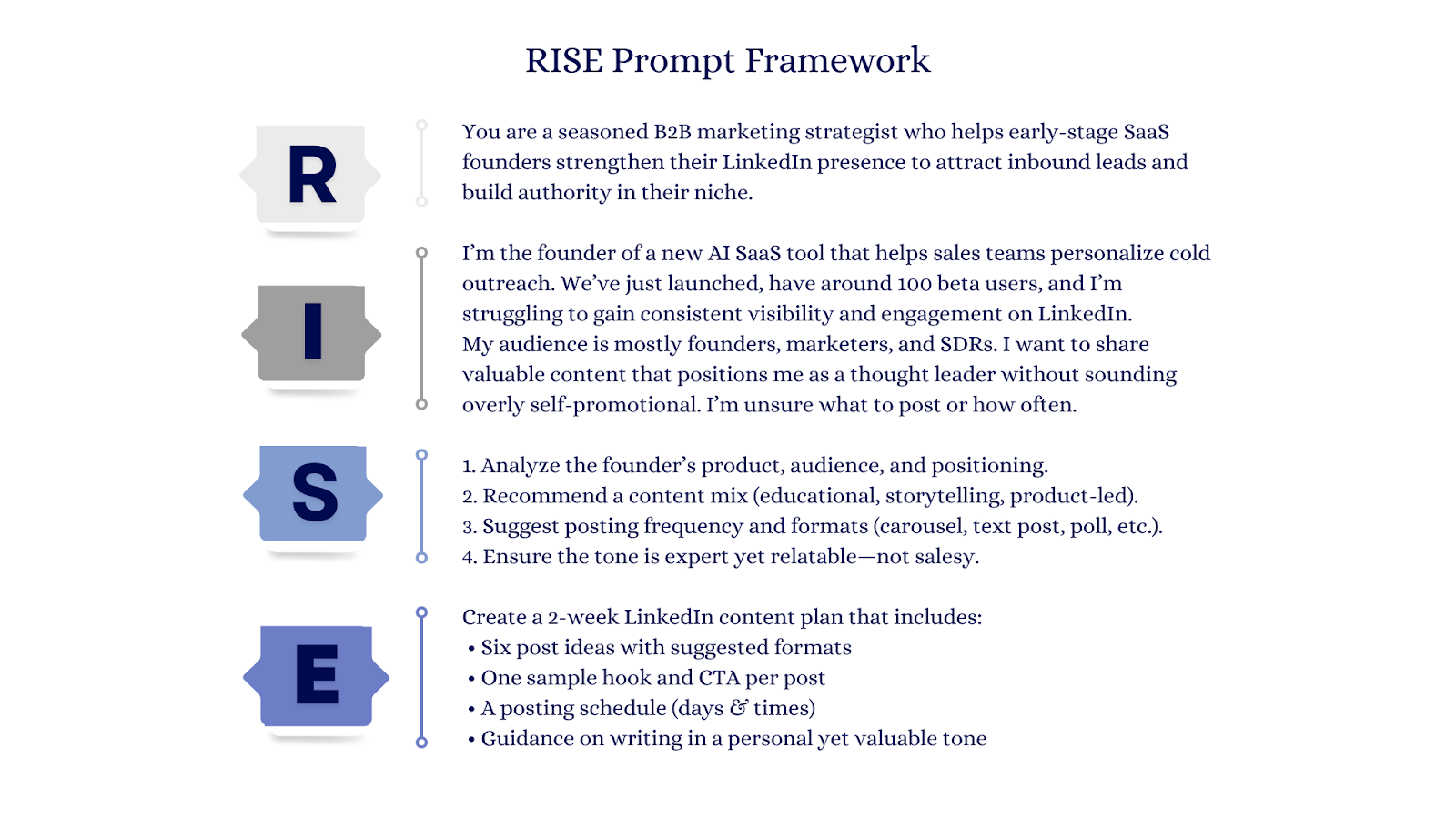

The RISE framework stands for Role, Input, Steps, and Expectation. It structures and clarifies prompts for AI tools like ChatGPT by ensuring the LLM knows whom it should act as, what information to work with, the logical process to follow, and the exact format of the response.

Here’s how to apply the RISE framework:

- Role: Assign the AI model a specific role that matches the expertise required for the task, such as market strategist, financial analyst, or policy advisor.

- Input: Provide the most relevant and accurate information, organized in a way that is easy for the AI model to reference.

- Steps: Break down the process into logical, sequential actions that the AI model should follow to complete the task.

- Expectation: Specify exactly what the output should look like, including the format, style, and level of detail.
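To make the four components concrete, here is a minimal Python sketch that assembles a RISE prompt from its parts. The `RisePrompt` class, its field names, and the sample values are hypothetical illustrations, not part of any official RISE specification or AI vendor's API; the rendered text is simply something you could paste into ChatGPT, Claude, or Gemini.

```python
from dataclasses import dataclass, field


@dataclass
class RisePrompt:
    """Hypothetical container for the four RISE components."""

    role: str  # who the model should act as
    input_text: str  # the information the model should work from
    steps: list[str] = field(default_factory=list)  # ordered actions to follow
    expectation: str = ""  # format, style, and level of detail of the output

    def render(self) -> str:
        """Join the components into one prompt, in R-I-S-E order."""
        # Number the steps so the model can follow them in sequence.
        numbered = "\n".join(
            f"{i}. {step}" for i, step in enumerate(self.steps, start=1)
        )
        return (
            f"Role: {self.role}\n"
            f"Input:\n{self.input_text}\n"
            f"Steps:\n{numbered}\n"
            f"Expectation: {self.expectation}"
        )


# Illustrative values only; replace them with your own role, data, and goals.
prompt = RisePrompt(
    role="Act as a financial analyst.",
    input_text="[paste the quarterly figures you want analyzed here]",
    steps=[
        "Identify the three largest changes versus last quarter.",
        "Explain the most likely drivers of each change.",
        "Recommend one action per change.",
    ],
    expectation="A one-page executive summary in bullet points.",
)
print(prompt.render())
```

Keeping the components as separate fields makes it easy to reuse the same role and expectation while swapping in new input for each request.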

For example, the founder of a new AI SaaS startup is struggling to gain traction on LinkedIn and wants to create a two-week content plan. Here’s how they might structure their prompt using RISE.

An Example of the RISE Framework in Action: Breaking Prompts Into Role, Input, Steps, and Expectation.

2. RTF Framework: Turning Vague Requests into Structured Insights

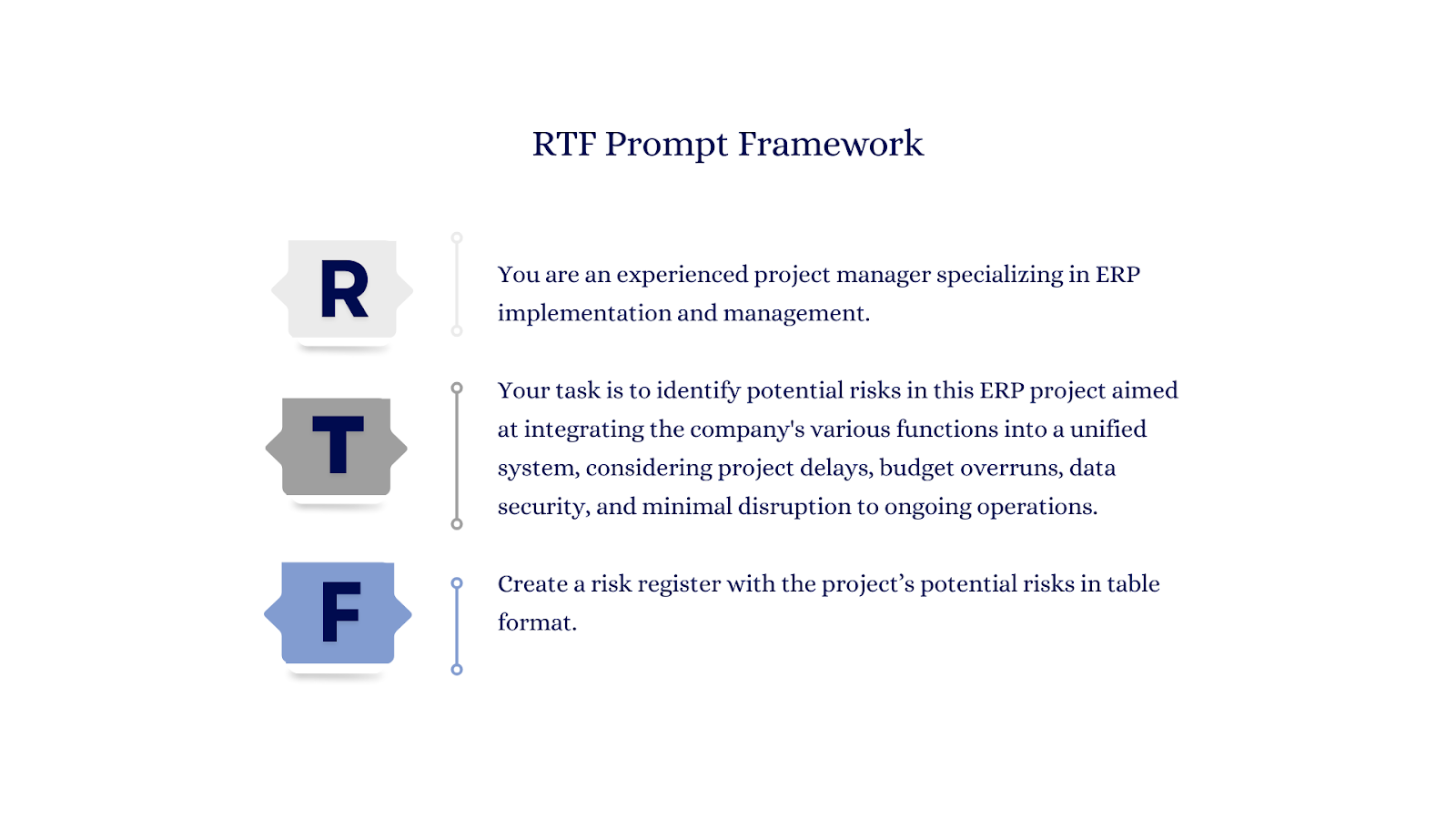

The RTF framework (Role, Task, Format) provides a straightforward way to shape requests, defining the role the AI tool should adopt, the task it should perform, and the format the output should take. RTF ensures the response is clear, structured, and ready to use in an executive setting.

- Role: Clearly define the role the AI model should take on, such as cybersecurity consultant, financial analyst, or marketing strategist.

- Task: Describe exactly what you want the AI model to do, focusing on a specific, actionable request.

- Format: Specify the structure you want for the output, such as bullet points, a table, or a concise executive summary.
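Because RTF has only three parts, it is easy to check that none is missing before a request goes out. The sketch below is a hypothetical helper, not any tool's API: it builds an RTF prompt and raises an error if the role, task, or format is blank, since a missing component is one common source of generic answers.

```python
def build_rtf_prompt(role: str, task: str, output_format: str) -> str:
    """Assemble a Role-Task-Format prompt, refusing incomplete requests."""
    parts = {"Role": role, "Task": task, "Format": output_format}
    # Collect any component that is empty or contains only whitespace.
    missing = [name for name, value in parts.items() if not value.strip()]
    if missing:
        raise ValueError(f"RTF prompt is missing: {', '.join(missing)}")
    # One labeled line per component, in R-T-F order (dicts keep insertion order).
    return "\n".join(f"{name}: {value.strip()}" for name, value in parts.items())


# Illustrative values only.
print(
    build_rtf_prompt(
        role="Act as a cybersecurity consultant.",
        task="Summarize the key security risks in renewing our main vendor contract.",
        output_format="A table with columns for risk, likelihood, and mitigation.",
    )
)
```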

For instance, a professional wants to identify potential risks in a major ERP project. Here’s how they could structure their prompt:

3. Telling a Story of Change With the BAB Framework

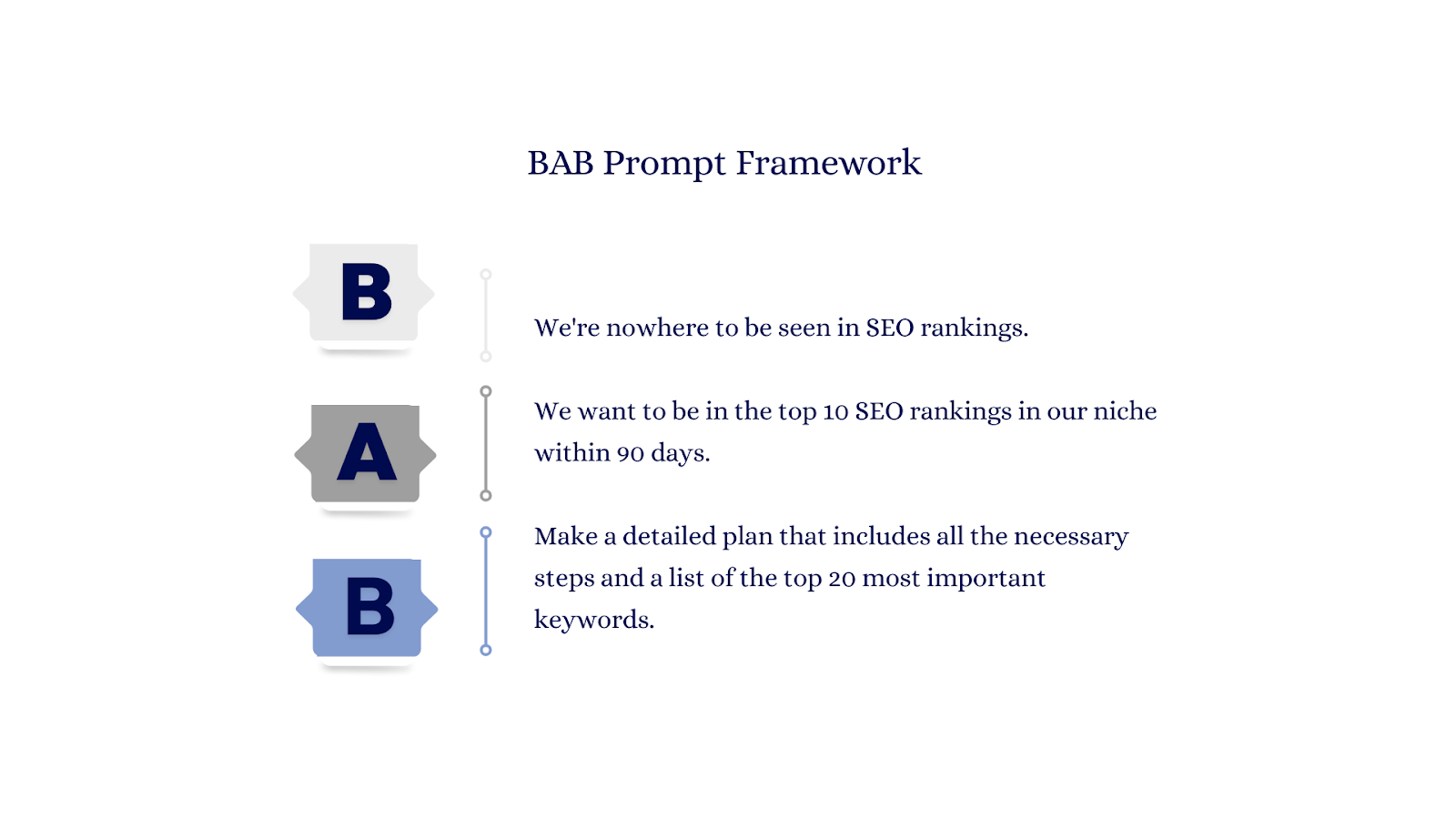

Setting goals and creating an actionable plan to reach them is a key part of leading a team. The BAB framework (Before, After, Bridge) frames prompts as a story of change. It asks the AI tool to describe the Before state (the current challenge), the After state (the improved outcome), and the Bridge (the path that connects the two). The BAB framework is well suited to communicating strategy, preparing stakeholder presentations, and supporting change‑management initiatives.

- Before: Clearly describe the current situation or challenge so that the AI model has full context for the problem.

- After: Define the desired future state or outcome in specific, measurable terms.

- Bridge: Outline the actions, strategies, or steps that connect the current state to the desired outcome.
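One practical benefit of a framework is reuse: the same Before-After-Bridge skeleton can serve many initiatives. This sketch stores BAB as a fill-in-the-blanks template using Python's standard `string.Template`. The template wording, the extra `$audience` placeholder, and the function name are assumptions for illustration, not a canonical BAB prompt.

```python
from string import Template

# Hypothetical reusable BAB skeleton; each $name is filled in per initiative.
BAB_TEMPLATE = Template(
    "Before: $before\n"
    "After: $after\n"
    "Bridge: $bridge\n"
    "Using this Before-After-Bridge structure, write a short change narrative "
    "for $audience."
)


def build_bab_prompt(**fields: str) -> str:
    # substitute() raises KeyError if any placeholder is left unfilled.
    return BAB_TEMPLATE.substitute(**fields)


# Illustrative values only.
print(
    build_bab_prompt(
        before="Monthly reporting takes each regional team five days of manual work.",
        after="Reports are produced within one day, with no manual data entry.",
        bridge="Automate data collection, standardize templates, and train team leads.",
        audience="the executive team",
    )
)
```

Using `substitute()` rather than `safe_substitute()` is a deliberate choice here: a forgotten Before, After, or Bridge fails loudly instead of silently producing a half-empty prompt.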

Take, for instance, an SEO professional who wants to create a detailed plan to strengthen their company’s search presence. Here’s how they could structure their prompt:

4. CARE: How Leaders Can Communicate Results with Clarity

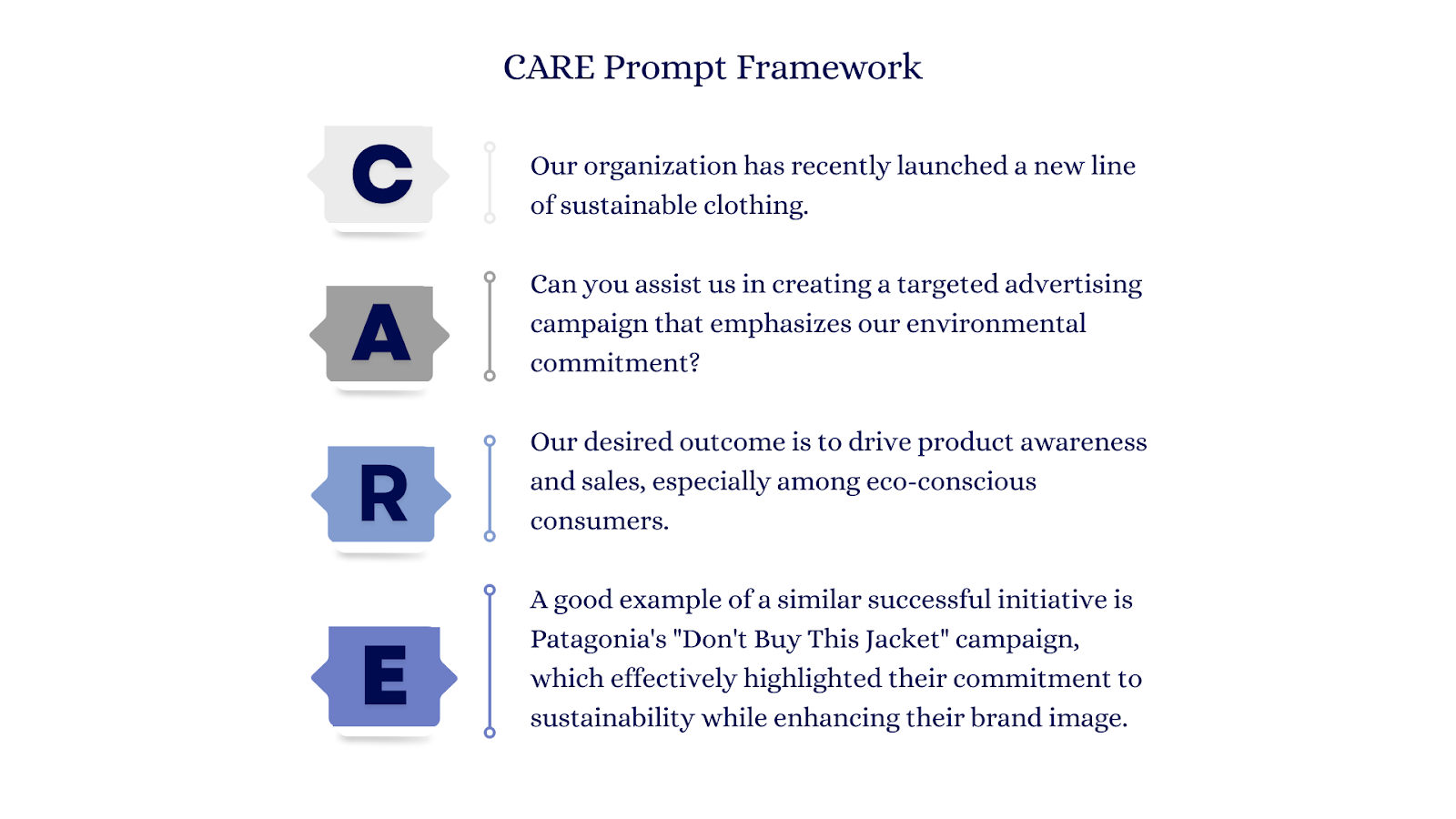

The CARE prompt engineering framework (Context, Action, Result, Example) helps recast prompts as structured narratives. It lets the AI model consider the full picture: the Context behind the situation, the Action taken, the Result achieved, and a concrete Example to bring it to life.

- Context: Clearly describe the background or situation so that the AI model understands the environment in which the action is taking place.

- Action: Specify the steps or initiatives that were taken or should be taken to address the situation.

- Result: Define the measurable outcome or improvement that resulted or is expected from the action.

- Example: Provide a concrete case, story, or scenario that illustrates the result in a relatable way.

In this example, a marketing professional wants to create a targeted advertising campaign around their company’s environmental commitment.

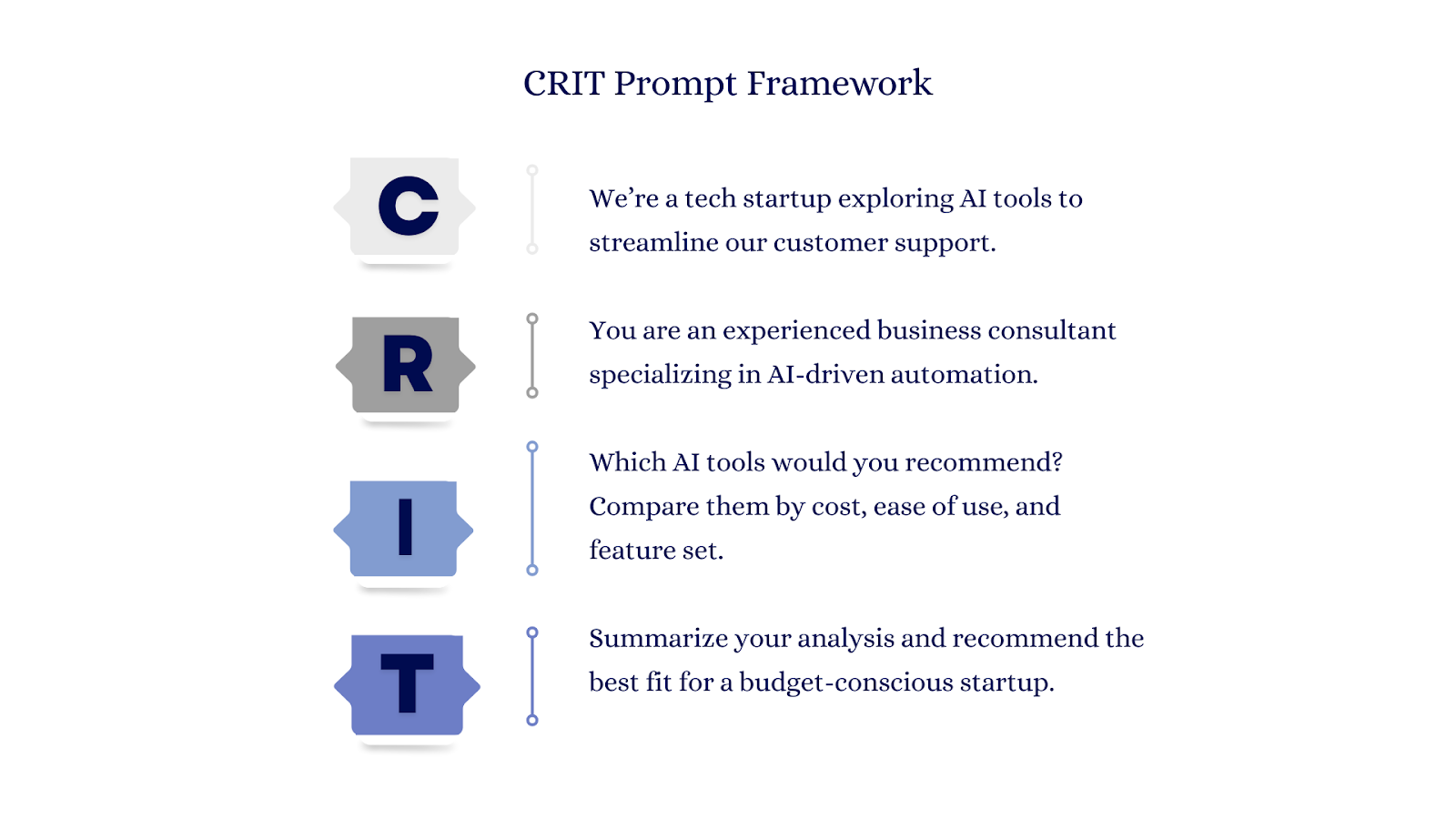

5. Turning AI Into a Boardroom Advisor with the CRIT Framework

So far, most of the frameworks we’ve seen don’t have a clear origin. The CRIT framework (Context, Role, Interview, Task) is different. It was developed by Geoff Woods, best‑selling author of The AI‑Driven Leader, to help executives get more strategic value from AI tools.

Woods created CRIT after realizing that traditional prompt examples treated AI like a passive assistant, when in fact it could act as a true thought partner. As he explains, “I realized it wasn't about asking AI questions, it was actually turning the tables and having AI ask me questions.”

CRIT works by guiding leaders through four deliberate steps: providing deep background information (Context), assigning a perspective or expertise (Role), allowing the AI to interview them with clarifying questions (Interview), and then defining the desired output (Task).

- Context: Share detailed background information so the AI model fully understands the situation, stakeholders, and objectives.

- Role: Assign the AI model a specific perspective or expertise, such as board member, market strategist, or investor.

- Interview: Invite the AI model to ask clarifying questions before producing a final answer, ensuring it has all the necessary details.

- Task: Clearly define the output you want, including the deliverable type, scope, and format.
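What sets CRIT apart is the Interview step: the first request asks the model for questions rather than an answer. The sketch below represents that exchange as a list of chat messages in the role/content shape used by chat APIs such as OpenAI's Chat Completions. It makes no API call; the function names, the interview wording, and the default of five questions are hypothetical choices for illustration.

```python
def crit_opening_prompt(context: str, role: str, task: str, max_questions: int = 5) -> str:
    """Build the first CRIT message: Context, Role, Interview instruction, Task."""
    return (
        f"Context: {context}\n"
        f"Role: {role}\n"
        # The Interview instruction asks for questions before any answer.
        f"Interview: Before completing the task, ask me up to {max_questions} "
        "clarifying questions, one at a time, and wait for my answers.\n"
        f"Task: {task}"
    )


def add_interview_round(messages: list[dict], question: str, answer: str) -> list[dict]:
    """Return a new history with one interview round (model question, your answer)."""
    return messages + [
        {"role": "assistant", "content": question},
        {"role": "user", "content": answer},
    ]


# Illustrative values only; in practice the question comes from the model's reply.
messages = [
    {
        "role": "user",
        "content": crit_opening_prompt(
            context="We are a 12-store regional retailer preparing next year's budget.",
            role="Act as an independent board member focused on capital allocation.",
            task="Draft a one-page board memo recommending where to invest first.",
        ),
    }
]
messages = add_interview_round(
    messages,
    question="Which investments are already under consideration?",
    answer="A new e-commerce platform and two store remodels.",
)
for message in messages:
    print(f"{message['role']}: {message['content'][:60]}")
```

Only after the interview rounds are complete would you ask the model to carry out the Task, so its answer draws on everything you supplied.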

Let’s say a tech startup co-founder wants to explore AI tools for their customer support line. Here’s how their prompt might look:

Turning Structured AI Prompts into Strategic Results

Remember David, spending his day untangling the mess his memo caused? And Jennifer across town, already reviewing feedback with her executive team? The differentiating factor was structure.

Frameworks don't eliminate strategic work, but they fundamentally change how efficiently it gets done.

We've explored how RISE provides systematic structure, how RTF delivers concise deliverables, how BAB frames change narratives, how CARE builds compelling cases, and how CRIT turns AI into a strategic thought partner. Each framework addresses the same challenge: converting expertise into outputs without burning hours on iteration. The structures already exist, they're used across industries, and they're available immediately.

Whether you use them can be the difference between clean outcomes that move an organization forward and the hidden cost of ever-growing piles of workslop.
