McDonald’s Proves AI Isn’t Just About Tech—It’s About Governance. Here Are the 4 Pillars That Matter

Learn why these four areas of AI governance are more crucial than ever, and why a compliance-by-design approach avoids costly penalties and offers major advantages.

An effective AI governance strategy must address four critical areas: security and privacy threats, bias detection, hallucination management, and data provenance tracking.

Organizations implementing "compliance by design" within their AI governance plans gain competitive advantages while avoiding costly retrofitting and regulatory penalties.

With AI regulations carrying penalties up to $40 million and shadow AI usage increasing 250% in some industries, a comprehensive approach to AI governance is now a business necessity.

There's a moment in every corporate disaster when someone, somewhere, makes a decision that seems perfectly reasonable at the time but later becomes the fulcrum on which everything turns. For McDonald's, that moment came in 2019 when executives acquired a small Silicon Valley startup called Apprente.

Apprente specialized in voice recognition technology designed specifically for the chaos of drive-thru environments—the screaming children, idling engines, and overlapping conversations that had confounded earlier systems. McDonald's executives saw a technology that could process the 69 million customers who visit McDonald's daily with machine-like precision.

By 2024, the system was operational in over 100 U.S. locations. The company reported accuracy rates around 85% and projected significant labor cost savings.

However, what McDonald's executives overlooked was the distinction between technical capability and operational readiness. And most critically, McDonald's legal team hadn't realized that collecting voice data to "identify repeat customers" meant harvesting biometric information, which is subject to strict privacy laws.

The first viral TikTok video appeared in February 2024. A customer trying to order a simple meal watched in bewilderment as the AI system attempted to add 260 chicken nuggets to her order, then bacon to her ice cream, and then refused to process any corrections. The video garnered millions of views. Soon, #McDonaldsAIFail was trending globally, with dozens of customers sharing their own surreal interactions with the malfunctioning system.

But the social media embarrassment was just the overture. In March 2024, Shannon Carpenter filed a class-action lawsuit under Illinois' Biometric Information Privacy Act. The suit claimed that McDonald's had collected thousands of customers' "voiceprint biometrics" without their consent—a violation that carries a potential fine of up to $5,000 per incident. While the actual financial exposure remained to be determined through litigation, the potential scale was significant given the volume of drive-thru transactions.

By July 2024, McDonald's was forced to announce the end of its IBM partnership and the shutdown of all AI voice ordering systems "no later than July 26, 2024."

The McDonald's cautionary tale reveals something profound about our current business landscape. We are living through what might be called the "deployment gap"—the chasm between AI's technical capabilities and our organizational ability to govern those capabilities responsibly.

Here, we cover the new compliance environment created by AI, as well as the four areas that any AI governance strategy should address. By taking a compliance-first approach to AI implementation, organizations can mitigate the risks that AI poses and realize the value that AI should deliver.

AI Governance Strategy and Shifting Regulatory Environments

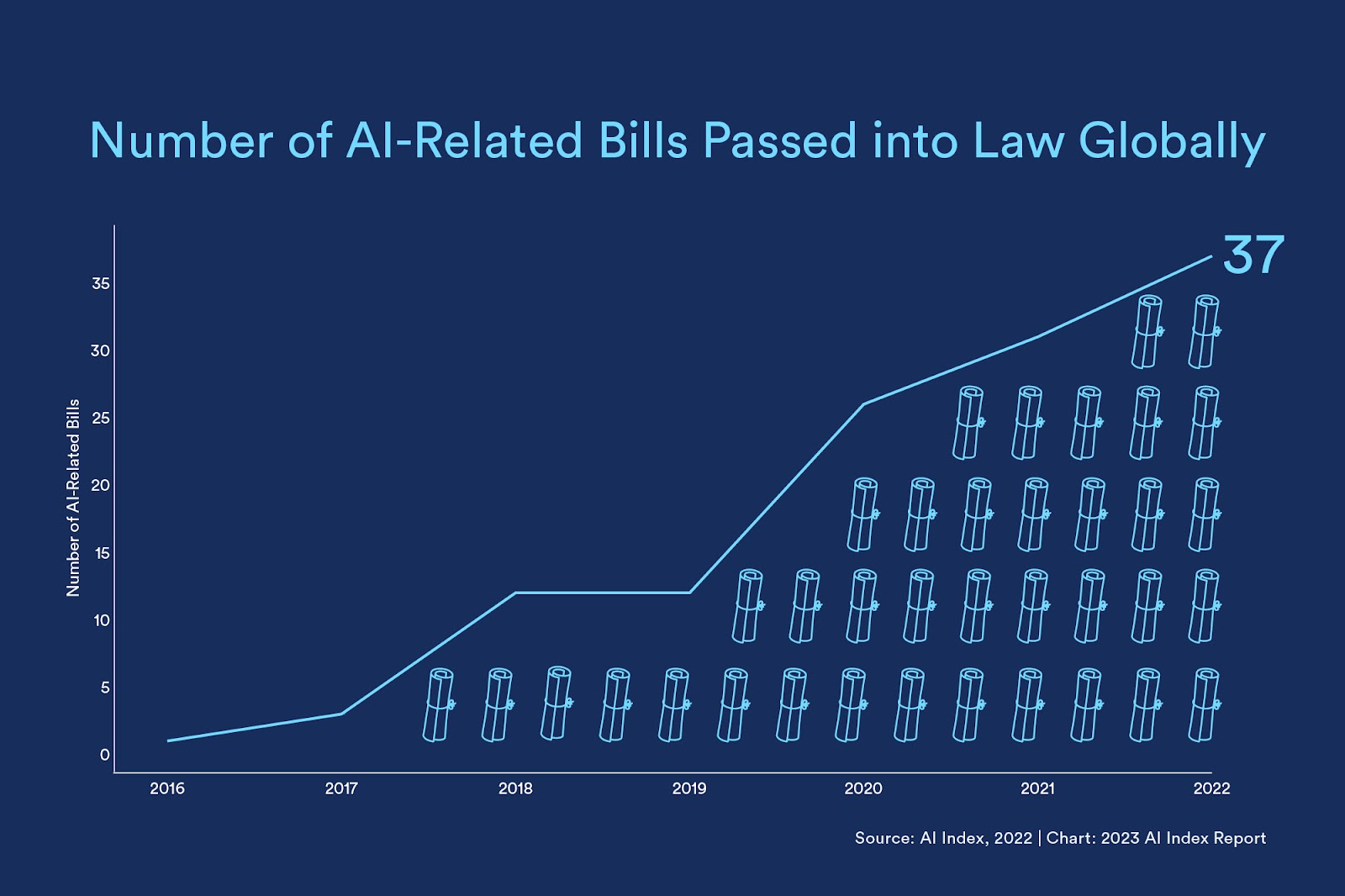

The regulatory landscape has undergone a fundamental shift in the wake of AI, with AI-related legislation increasing significantly since 2016. The EU AI Act now imposes penalties of up to €35 million ($40 million) or 7% of global revenue for prohibited AI systems, with enforcement beginning in February 2025. Local laws like NYC's Local Law 144 levy fines of up to $1,500 per violation for AI hiring tools, with each day of non-compliance counting as a separate violation. And the DOJ is actively investigating AI compliance violations in healthcare, with False Claims Act penalties reaching into the hundreds of millions.

These are material business threats that demand a comprehensive approach to AI governance. Traditional risk management approaches fall short because they treat AI as just another technology. But AI presents unique risks that require specialized controls across four critical areas.

The most successful AI governance approaches address four fundamental areas:

- Security and privacy threats like prompt injection and shadow AI usage

- Bias and fairness issues that trigger regulatory enforcement

- Hallucinations that create legal liability

- Provenance challenges that expose organizations to copyright violations

Companies that build these controls into their AI deployments from the outset—compliance by design—gain sustainable competitive advantages, while competitors scramble to retrofit compliance into existing systems.

1. Security & Privacy, The Hidden Cost of Shadow AI

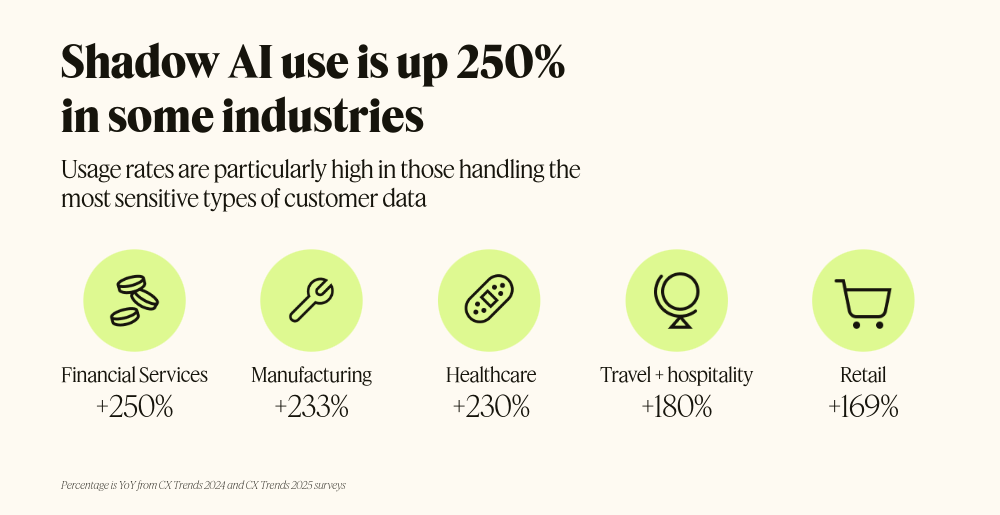

Security must be the foundation of AI governance. The average enterprise now runs 66 different generative AI applications, with employees adopting new tools at a rate that exceeds IT's ability to track them. This “shadow AI usage” results in massive compliance exposure, from data breaches to regulatory violations. Research suggests that the use of shadow AI is up by as much as 250% in some industries — a particular concern in sectors such as financial services, manufacturing, and healthcare.

Over one-third of workers admit to sharing sensitive company information with AI systems their employers never approved. UK companies report that one in five have already experienced data breaches from unauthorized AI use, with GDPR fines potentially reaching €20 million or 4% of global revenue. IBM's research suggests the solution lies not in prohibition but in integration within an AI governance plan—channeling shadow AI usage into approved systems with proper security controls.

Financial exposure can multiply quickly. Under rules such as NYC's Local Law 144, each day of non-compliance can mean up to $1,500 in fines, which adds up to as much as $10,500 in weekly exposure per violation. Beyond monetary penalties, non-compliant organizations can face lawsuits from job candidates and human rights claims.

A practical AI governance approach addresses shadow AI and other security and privacy risks through comprehensive visibility and governance controls. Organizations can implement discovery tools to identify unauthorized AI applications, establish clear usage policies with security guardrails, and deploy data loss prevention systems designed explicitly for AI interactions. The framework should include employee training on approved AI tools, automated monitoring of AI-related data flows, and classification systems that distinguish between sanctioned and unauthorized AI capabilities.
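To make the discovery and classification step concrete, here is a minimal Python sketch that labels outbound requests as sanctioned or unauthorized AI usage. It assumes the organization can export (user, URL) pairs from a proxy or gateway log. The domain lists, function names, and sample log are all hypothetical placeholders, not a reference to any particular product.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical policy lists; a real deployment would load these
# from a managed inventory of approved and known AI services.
SANCTIONED_AI_DOMAINS = {"ai.internal.example.com"}
KNOWN_AI_DOMAINS = SANCTIONED_AI_DOMAINS | {
    "chat.example-ai.com",
    "api.example-llm.net",
}


def classify_request(url: str) -> str:
    """Label a URL as 'sanctioned', 'unauthorized', or 'not_ai'."""
    host = (urlparse(url).hostname or "").lower()
    if host in SANCTIONED_AI_DOMAINS:
        return "sanctioned"
    if host in KNOWN_AI_DOMAINS:
        return "unauthorized"
    return "not_ai"


def summarize_shadow_ai(log_entries):
    """Count unauthorized AI requests per user from (user, url) pairs."""
    counts = Counter()
    for user, url in log_entries:
        if classify_request(url) == "unauthorized":
            counts[user] += 1
    return dict(counts)


# Assumed sample data for illustration only.
sample_log = [
    ("alice", "https://ai.internal.example.com/v1/chat"),
    ("bob", "https://chat.example-ai.com/session"),
    ("bob", "https://api.example-llm.net/v1/complete"),
    ("carol", "https://intranet.example.com/wiki"),
]
shadow_usage = summarize_shadow_ai(sample_log)
print(shadow_usage)
```

A report like this is a starting point for the "integration, not prohibition" approach: it shows which teams are reaching for unapproved tools so they can be steered toward sanctioned ones.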

2. Bias Detection, Mandatory Fairness

Legal imperatives have also fundamentally changed the bias testing requirements for AI governance and compliance. NYC's Local Law 144 requires bias audits for AI used in hiring decisions, while Colorado's comprehensive AI law, effective 2027, extends bias testing requirements to any "consequential decision." Organizations can no longer treat bias testing as optional—it's now a regulatory requirement.

“There’s a real danger of systematizing the discrimination we have in society [through AI technologies]. We have to be very explicit, or have a disclaimer, about what our error rates are like.”

—Timnit Gebru, Executive Director of The Distributed AI Research Institute

Consider New York City's Local Law 144, which requires bias audits for AI hiring tools. The law seemed straightforward when it took effect in July 2023: conduct annual bias audits, publish results, and notify candidates about AI use. Companies faced penalties up to $1,500 per violation, with each day of non-compliance constituting a separate violation.

Despite hundreds of organizations in NYC using AI hiring tools, only five companies had publicly published their required bias audit results by late 2023. Most companies fell into three categories: those that were completely unaware that they were subject to the law, those that conducted audits but failed to publish the results, and those that simply ignored the requirements altogether.

Bias legislation exists for good reason. In one cautionary tale, the COMPAS algorithm used by judges to predict whether defendants should be detained or released on bail was found to be biased against African-Americans, according to ProPublica reporting. The algorithm assigned risk scores based on arrest records and defendant demographics, but consistently assigned higher risk scores to African-American defendants compared to white defendants with similar profiles. This bias directly translated into longer detention periods. In another example, facial recognition systems from major tech companies misidentified darker-skinned women up to 34% of the time, compared to error rates of less than 1% for lighter-skinned men, according to MIT research. Findings like these show how algorithms can amplify existing societal prejudices with mathematical precision, making bias detection and mitigation essential in AI governance.

Building bias detection into AI governance requires a three-tier approach:

- Pre-deployment testing measures statistical parity across protected groups, equal opportunity measurements, and demographic parity assessments.

- Continuous monitoring implements real-time bias detection algorithms, regular recalibration of fairness metrics, and impact assessments for model updates.

- Documentation requirements mandate audit trails of bias testing procedures, remediation actions when bias is detected, and public reporting for high-risk systems.
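As an illustration of the pre-deployment tier, the sketch below computes per-group selection rates and impact ratios (each group's rate divided by the highest group's rate), a common way to express demographic parity in hiring audits. The function names and toy data are hypothetical, and the 0.8 flagging threshold is an assumption borrowed from the widely used "four-fifths" rule of thumb, not a figure taken from any statute cited here.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of selected candidates per group.

    `records` is an iterable of (group, selected) pairs, where
    `selected` is True if the tool advanced the candidate.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        return {g: 0.0 for g in rates}
    return {g: r / best for g, r in rates.items()}


def flag_groups(ratios, threshold=0.8):
    """Return groups whose impact ratio is below the assumed threshold."""
    return sorted(g for g, r in ratios.items() if r < threshold)


# Toy data: group A has 6 of 10 selected, group B has 3 of 10 selected.
records = [("A", i < 6) for i in range(10)] + [("B", i < 3) for i in range(10)]
rates = selection_rates(records)
ratios = impact_ratios(rates)
flagged = flag_groups(ratios)
print(rates, ratios, flagged)
```

Running the same calculation on every model update, and storing the results, covers a small part of the monitoring and documentation tiers as well.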

Companies that proactively integrate bias testing into AI governance from the start avoid the costly retrofit requirements that plague their competitors. More importantly, they demonstrate to customers, investors, and regulators that they're serious about responsible AI—a competitive advantage that increasingly drives purchase decisions and partnership opportunities.

3. Hallucinations, AI Inaccuracy as Legal Liability

The accuracy challenge created by AI hallucinations represents the most complex element of modern AI governance. AI hallucinations occur when artificial intelligence systems confidently generate information that is factually incorrect, fabricated, or nonsensical, presenting false outputs with the same authoritative tone as legitimate information. Hallucinations are particularly dangerous because they appear plausible and correct, making them difficult to detect without verification. They have already led to legal investigations and costly public failures:

- In 2024, the U.S. Department of Justice issued subpoenas to several pharmaceutical and digital health companies, focusing on whether AI-generated documentation led to care that was excessive or medically unnecessary—a potential violation of the False Claims Act, with penalties reaching hundreds of millions.

- The Texas Attorney General settled with a company that sold generative AI tools used to create patient documentation and treatment plans, which were marketed as "highly accurate." The settlement resolved allegations that the company made false and misleading claims about the AI's accuracy.

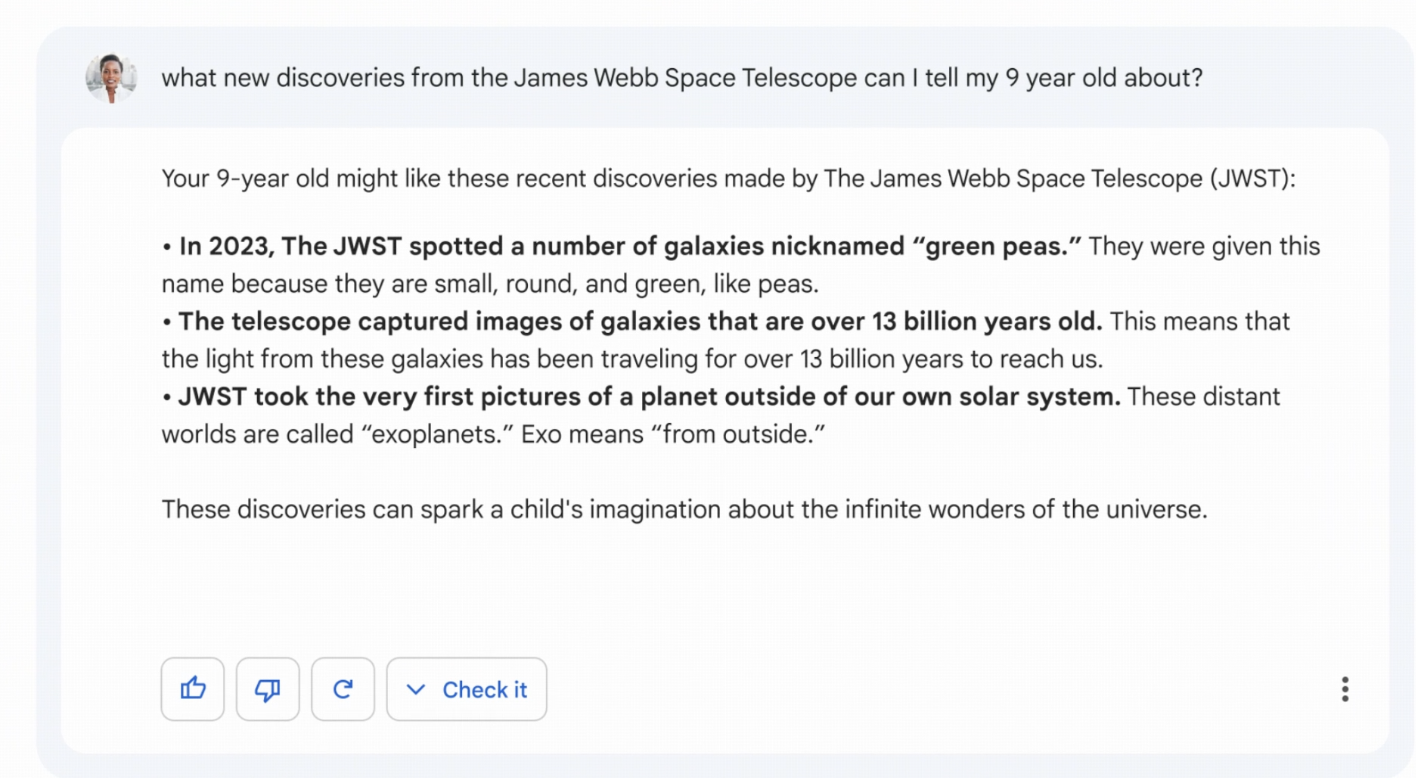

- Google learned a $100 billion (in market capitalization) lesson about AI governance in the most public way possible—during a live demonstration where their Bard chatbot confidently fabricated facts about the James Webb Space Telescope, causing Alphabet's stock to plummet 8-9% overnight.

Effective hallucination management within AI governance requires a three-pronged approach: prevention, detection, and correction. Organizations should implement a structured approach to selecting use cases that considers hallucination risks, deploy retrieval-augmented generation (RAG) systems that enable AI to cross-reference authoritative knowledge bases, and establish clear guardrails through the use of prompt templates and task restrictions.

Human oversight remains critical—employees must be trained to verify AI outputs, especially for high-stakes decisions. Meanwhile, risk-based review systems should flag suspect results for further investigation. Additionally, organizations should maintain feedback loops that enable AI systems to learn from their mistakes, thereby reducing future hallucination rates and building institutional knowledge about when and why errors occur.
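One way to picture a risk-based review step is a simple grounding check: compare each sentence of an AI answer against the passages a retrieval system supplied, and route poorly supported sentences to a human. The Python sketch below uses plain word overlap as the support signal. That choice, the 0.6 threshold, and all names are assumptions made for illustration; production systems typically rely on stronger methods.

```python
import re

# Small assumed stopword list; real systems would use a fuller one.
STOPWORDS = {"the", "a", "an", "of", "to", "in", "is", "are",
             "and", "for", "on", "was", "by"}


def content_words(text):
    """Lowercase word tokens with common stopwords removed."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return {w for w in tokens if w not in STOPWORDS}


def support_score(sentence, sources):
    """Fraction of the sentence's content words found in any source."""
    words = content_words(sentence)
    if not words:
        return 1.0  # nothing to verify
    source_words = set()
    for passage in sources:
        source_words |= content_words(passage)
    return len(words & source_words) / len(words)


def review_queue(answer, sources, threshold=0.6):
    """Return sentences whose support score is below the threshold."""
    parts = re.split(r"(?<=[.!?])\s+", answer)
    sentences = [s.strip() for s in parts if s.strip()]
    return [s for s in sentences if support_score(s, sources) < threshold]


# Assumed example: one retrieved passage and a two-sentence answer.
sources = ["The refund policy allows returns within 30 days of purchase."]
answer = ("Returns are allowed within 30 days of purchase. "
          "Shipping is free worldwide.")
flagged = review_queue(answer, sources)
print(flagged)
```

Here the second sentence has no support in the retrieved passage, so it is the one sent for human verification. Logging which sentences get flagged, and why, feeds the feedback loop described above.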

4. Your Data’s Origin Story

Data provenance—a documented history of data as it moves through processes and systems—represents the most fundamental element of AI governance. A 2024 study in Nature Machine Intelligence, which audited over 1,800 AI datasets, found that more than 70% lacked valid license information and 50% contained licensing errors. This "crisis in misattribution" means most AI systems operate on legally questionable data foundations—a risk that sophisticated AI governance must address proactively.

Between March 17 and April 6, 2022, Equifax issued inaccurate credit scores for millions of consumers applying for auto loans, mortgages, and credit cards—a failure of data quality and lineage tracking. For over 300,000 individuals, the credit scores were off by 20 points or more, enough to affect interest rates or lead to rejected loan applications. Equifax leaders blamed the inaccurate reporting on a "coding issue" within a legacy on-premises server, where specific attribute values like "number of inquiries within one month" were incorrect. The fallout was swift and severe: stock prices fell approximately 5%, and the company faced a class-action lawsuit led by a Florida resident who was denied an auto loan after receiving a credit score 130 points lower than it should have been.

Companies that can't demonstrate clear data provenance face copyright infringement claims, licensing violations, and regulatory penalties. More strategically, organizations with robust data provenance capabilities can confidently deploy AI in regulated industries while their competitors remain paralyzed by legal uncertainty.

Building compliance-ready data systems requires three core capabilities within AI governance:

- Data source documentation with complete licensing audit trails, copyright and intellectual property verification, and usage rights mapping for each data source

- Lineage tracking containing immutable records of data transformations, model training data provenance, and output attribution capabilities

- Policy enforcement featuring automated compliance checking in data pipelines, approval workflows for new data sources, and regular audits of data usage rights
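The lineage tracking and policy enforcement capabilities can be sketched together in a few lines of Python. The example below keeps a hash-chained log of dataset events, so later edits to a record become detectable, and refuses to log data whose license is not on an approved list. This is a tamper-evident log rather than truly immutable storage, and the license list, field names, and dataset name are hypothetical.

```python
import hashlib
import json

# Hypothetical allowlist of licenses approved for training use.
APPROVED_LICENSES = {"CC-BY-4.0", "MIT", "internal"}


def record_hash(body):
    """Deterministic SHA-256 over a JSON-serialized record body."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_event(log, dataset, step, license_id):
    """Append a lineage event linked to the previous one.

    Raises ValueError if the license is not on the approved list.
    """
    if license_id not in APPROVED_LICENSES:
        raise ValueError(
            f"license {license_id!r} is not approved for {dataset!r}"
        )
    body = {
        "dataset": dataset,
        "step": step,
        "license": license_id,
        "prev_hash": log[-1]["hash"] if log else None,
    }
    log.append({**body, "hash": record_hash(body)})
    return log


def verify_chain(log):
    """Return True if every record matches its hash and its predecessor."""
    prev = None
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or entry["hash"] != record_hash(body):
            return False
        prev = entry["hash"]
    return True


lineage = []
append_event(lineage, "support_tickets_2024", "ingested", "internal")
append_event(lineage, "support_tickets_2024", "pii_redacted", "internal")
chain_ok = verify_chain(lineage)

lineage[0]["step"] = "tampered"  # simulate an after-the-fact edit
chain_ok_after_edit = verify_chain(lineage)
print(chain_ok, chain_ok_after_edit)
```

Because each record stores the hash of the one before it, changing an earlier entry breaks verification, which gives auditors a cheap way to check that the documented history has not been rewritten.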

The Answer Is Proactive Compliance

Six months after McDonald's shut down its AI ordering system, the company quietly began exploring new AI initiatives across various areas of its operations. This time the approach was fundamentally different. Rather than rushing to deploy voice ordering technology, McDonald's announced plans to introduce AI across all 43,000 restaurants to tackle behind-the-scenes operational challenges.

“If we can proactively address those issues [related to AI technology] before they occur, that’s going to mean smoother operations in the future.”

—Brian Rice, McDonald’s Executive Vice President and Global CIO

The new strategy focuses on predictive maintenance for kitchen equipment, computer vision to verify order accuracy, and AI-powered tools for managers rather than customer-facing voice technology. McDonald's CIO, Brian Rice, told the Wall Street Journal that the company is developing a "generative AI virtual manager" to handle administrative tasks and alleviate stress on store employees.

The contrast with McDonald's earlier approach is instructive. The company is now taking a more measured, proactive path to AI deployment, one that prioritizes proven use cases over experimental technology.

The McDonald's saga highlights that in the AI era, competitive advantage doesn't flow from having the most sophisticated technology. It flows from having the most sophisticated approach to governing that technology. Organizations that understand this distinction are the ones positioned to unlock AI's full value.
