3 AI Governance Framework Questions Keeping Leaders Awake

Explore 3 critical AI governance framework questions keeping executives awake, from proving ROI to managing legal risks, with practical solutions for 2025.

While 72% of companies now use AI, only 18% have enterprise-wide councils with authority to make responsible AI governance decisions, creating significant risks as adoption accelerates.

The Texas Attorney General's settlement with Pieces Technologies demonstrates that AI governance framework transparency is no longer optional.

IBM research shows executives sensitive to the ethical costs of Gen AI deployment are 27% more likely to see their organization outperform on revenue growth.

What can a legal settlement in Texas teach us about AI governance frameworks?

In September 2024, the Texas Attorney General announced a "first-of-its-kind" settlement with Pieces Technologies, a Dallas-based AI company that promised hospitals its software had a "critical hallucination rate" of less than 0.001% and a "severe hallucination rate" of less than 1 per 100,000. Four major Texas hospitals were feeding real-time patient data to Pieces' AI system to generate clinical summaries for doctors and nurses treating patients.

There was just one problem: the investigation found the accuracy claims were "likely inaccurate and may have deceived hospitals about the accuracy and safety of the company's products." While the settlement carried no financial penalty, it required something far more valuable—total transparency about AI limitations, potential harms, and the exact methodologies behind any accuracy claims. A state enforcer had drawn a line in the sand: AI companies could no longer hide behind vague metrics when lives were at stake.
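To see why the settlement focused on methodology, consider what a "fewer than 1 per 100,000" (0.001%) claim demands. In the most favorable case, where n reviewed outputs contain zero errors, a true rate below p can be claimed at 95% confidence only once (1 - p)^n <= 0.05, which works out to roughly n >= 3/p (the statistical "rule of three"). The sketch below assumes independent outputs and reviewers who catch every error. It illustrates the arithmetic only and is not a description of how Pieces tested its product.

```python
import math


def min_error_free_samples(max_rate: float, confidence: float = 0.95) -> int:
    """Smallest number of reviewed outputs, all error-free, needed to claim
    the true error rate is below max_rate at the given confidence level.

    Solves (1 - max_rate) ** n <= 1 - confidence for n, assuming independent
    outputs and that reviewers detect every error.
    """
    if not 0 < max_rate < 1 or not 0 < confidence < 1:
        raise ValueError("max_rate and confidence must be in (0, 1)")
    alpha = 1 - confidence
    # log1p(-x) computes log(1 - x) accurately for tiny x such as 1e-5.
    return math.ceil(math.log(alpha) / math.log1p(-max_rate))


# "Fewer than 1 per 100,000" (0.001%) at 95% confidence:
n = min_error_free_samples(1e-5)
print(f"{n:,} error-free outputs needed")  # roughly 300,000
```

Under these generous assumptions, close to 300,000 consecutive error-free summaries would be needed before the headline figure is even statistically defensible.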

The Pieces case exposes a broader crisis facing organizations in 2025. Stanford's AI Index 2025 reports that 78% of organizations used AI in 2024, up from 55% in 2023, yet only 11% have fully implemented fundamental responsible AI capabilities.

Here, we cover three common questions that executives are asking about AI ethics and governance, along with practical solutions.

1. "How Do We Prove AI Governance Actually Pays Off?"

Organizations struggle to demonstrate measurable business value from responsible AI investments beyond basic compliance requirements. Gartner's 2024 survey reveals that 49% of survey participants cite difficulty in estimating and demonstrating the value of AI projects as the primary obstacle to AI adoption. This fundamental challenge makes AI governance framework budgets vulnerable when business pressures intensify.

There is evidence that AI governance can translate into ROI. The International Association of Privacy Professionals (IAPP) 2025 governance survey of over 670 individuals from 45 countries found that organizations leveraging existing privacy and compliance functions to support AI governance are more successful than those building entirely new structures. IBM research also shows that executives who shy away from gen AI initiatives when they sense an ethical cost are 27% more likely to see their organization outperform on revenue growth.

A staged approach requires establishing baseline metrics before implementing an AI governance framework, then tracking improvements across financial impact, operational efficiency, and risk avoidance. IBM research suggests organizations measure ROI through:

- Labor cost reductions from hours saved

- Operational efficiency gains from streamlined workflows

- Increased revenue from enhanced customer experiences

The research identifies both "hard ROI" (tangible financial benefits like cost savings and profit increases) and "soft ROI" (long-term organizational benefits like employee satisfaction and improved decision-making).

Demonstrating value remains challenging: IBM's Institute for Business Value found that enterprise-wide AI initiatives achieved an ROI of just 5.9% while incurring a 10% capital investment. However, organizations following AI best practices show significantly better results. Product development teams that implemented four key AI best practices to an "extremely significant" extent reported a median ROI on generative AI of 55%.
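A baseline-versus-after comparison of hard ROI can be kept deliberately simple. The sketch below, using made-up figures, sums the three benefit categories IBM lists (labor hours saved, efficiency gains, added revenue), each measured against the pre-implementation baseline, and divides net benefit by total cost. The class and field names are hypothetical, and soft ROI such as employee satisfaction is left out because it does not reduce to a single currency figure.

```python
from dataclasses import dataclass


@dataclass
class AIInitiative:
    """Hypothetical inputs for one AI initiative, each measured against a
    pre-implementation baseline over the same period."""
    hours_saved: float         # labor hours saved vs. baseline
    hourly_cost: float         # fully loaded cost per labor hour
    efficiency_savings: float  # other operating costs avoided
    revenue_gain: float        # incremental revenue attributed to the initiative
    total_cost: float          # build, run, and governance costs


def hard_roi(i: AIInitiative) -> float:
    """Return hard ROI as a fraction: (benefits - costs) / costs."""
    if i.total_cost <= 0:
        raise ValueError("total_cost must be positive")
    benefits = i.hours_saved * i.hourly_cost + i.efficiency_savings + i.revenue_gain
    return (benefits - i.total_cost) / i.total_cost


example = AIInitiative(
    hours_saved=2_000, hourly_cost=60.0,
    efficiency_savings=30_000, revenue_gain=50_000,
    total_cost=190_000,
)
print(f"Hard ROI: {hard_roi(example):.1%}")  # Hard ROI: 5.3%
```

Keeping governance costs inside `total_cost` makes the trade-off explicit: oversight pays for itself only if it protects or enlarges the benefit side.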

2. "How Much Human Oversight Do We Actually Need?"

Organizations face mounting pressure to define appropriate human oversight protocols while managing costs and liability implications. Recent research indicates that as AI systems grow more complex and autonomous, ensuring their responsible use becomes a formidable challenge, particularly in high-stakes domains, and the challenge compounds when organizations introduce AI agents into workflows. The EU AI Act now requires that high-risk AI systems be designed so that humans can oversee them effectively.

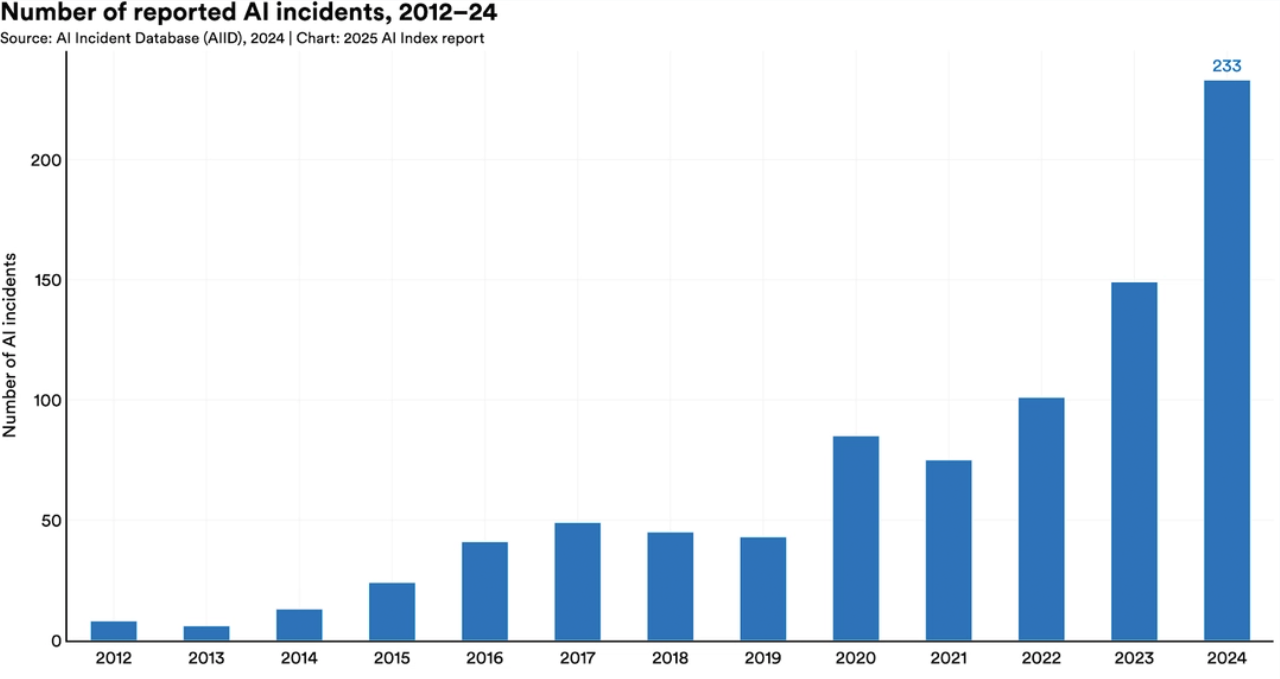

Managing AI errors without losing innovation momentum is a difficult balance. Stanford's AI Index reports that the number of AI-related incidents rose to 233 in 2024, a record high and a 56% increase over 2023. Reported incidents included deepfake intimate images and chatbots allegedly implicated in a teenager's suicide.

The challenge isn't simply whether to include humans in AI decision-making; it's determining the right level and type of intervention for different risk scenarios. McKinsey research shows that only 18% of organizations have enterprise-wide councils with authority to make responsible AI governance decisions, and only one-third of companies require AI risk awareness as a skill for technical talent. Yet as regulations multiply, passive monitoring is no longer sufficient.

The most effective AI governance framework implementations establish clear protocols based on risk tiers rather than one-size-fits-all approaches. Organizations should categorize AI applications into risk levels:

- Low-risk applications requiring human-on-the-loop monitoring with periodic reviews

- Medium-risk applications needing regular human validation of outputs

- High-risk applications demanding human-in-the-loop approval for critical decisions
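The three tiers can be expressed as a routing rule that runs before an AI output takes effect. In this sketch the tier names mirror the list above, while the 10% validation sample for medium-risk outputs and the choice to send non-critical high-risk outputs to after-the-fact validation are assumptions to adapt, not requirements from any framework. The function and action names are hypothetical.

```python
import random
from enum import Enum


class RiskTier(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Assumed share of medium-risk outputs a reviewer validates; tune per use case.
MEDIUM_VALIDATION_RATE = 0.10


def route_output(tier: RiskTier, is_critical_decision: bool, rng: random.Random) -> str:
    """Decide what happens to one AI output before it takes effect."""
    if tier is RiskTier.HIGH:
        # Human-in-the-loop: critical decisions wait for explicit approval;
        # other high-risk outputs are still validated by a person after release.
        return "hold_for_approval" if is_critical_decision else "release_and_validate"
    if tier is RiskTier.MEDIUM:
        # Regular human validation on a random sample of outputs.
        if rng.random() < MEDIUM_VALIDATION_RATE:
            return "release_and_validate"
        return "release_and_log"
    # Low risk: human-on-the-loop, with logs reviewed periodically.
    return "release_and_log"


rng = random.Random(42)
print(route_output(RiskTier.HIGH, True, rng))  # hold_for_approval
print(route_output(RiskTier.LOW, True, rng))   # release_and_log
```

Passing the random generator in explicitly keeps the medium-risk sampling reproducible, which helps when auditors ask why a given output was or was not reviewed.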

Trust on the Line: Combatting Bias

Organizations must also address automation bias, the tendency to over-rely on AI outputs. The danger is sharpest when those outputs are themselves biased, as in the case of COMPAS, a recidivism prediction algorithm used in criminal justice that demonstrated significant racial bias in the risk scores informing sentencing decisions.

Enterprises need robust oversight mechanisms as AI tools become increasingly embedded in business operations. Bias can perpetuate real-world harm while exposing organizations to reputational and regulatory risks. As EY's Alison Kay notes, "trust can be lost very quickly—and it's almost impossible to regain," making proactive bias mitigation both a commercial imperative and an ethical obligation. Here are key actions enterprises can take:

- Establish internal AI audits first: Conduct regular reviews of all AI tools your organization uses before bringing in external auditors as a final check.

- Mandate board-level AI literacy: Ensure directors and senior leadership understand AI risks and bias issues, as "tone from the top" determines success.

- Create cross-functional review teams: Expand data literacy beyond IT departments so that employees across functions can identify bias in the AI tools that affect your operations.

- Implement employee feedback channels: Set up clear procedures for staff to report suspected AI bias in hiring, customer service, or other business processes.

- Require vendor transparency: Demand that AI suppliers explain how their systems work and provide evidence of bias testing before procurement.

- Establish AI ethics oversight: Create internal committees to review AI tool usage and set policies for high-stakes decisions affecting employees or customers.
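One simple form of the bias-testing evidence worth requesting from vendors, or producing in an internal audit, is a comparison of selection rates across groups. The sketch below computes each group's selection rate relative to the highest-rate group and flags ratios under 0.8, the "four-fifths" rule of thumb from the US Uniform Guidelines on Employee Selection Procedures. It is a screening heuristic rather than a full fairness audit, and the group labels and counts are made up.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to selected / applicants, skipping empty groups."""
    return {g: sel / total for g, (sel, total) in outcomes.items() if total > 0}


def adverse_impact_flags(
    outcomes: dict[str, tuple[int, int]], threshold: float = 0.8
) -> dict[str, tuple[float, bool]]:
    """Return (impact ratio, flagged) per group, where the impact ratio is the
    group's selection rate divided by the highest group's selection rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any selections; ratios are undefined")
    return {g: (r / best, r / best < threshold) for g, r in rates.items()}


# Hypothetical screening results from an AI resume filter: (selected, applicants)
screening = {"group_a": (50, 100), "group_b": (30, 100)}
for group, (ratio, flagged) in adverse_impact_flags(screening).items():
    print(f"{group}: impact ratio {ratio:.2f}{'  <-- review' if flagged else ''}")
```

A flag is a prompt for human investigation, not proof of discrimination; small samples in particular can produce misleading ratios.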

3. "What About Our Legal Exposure?"

Legal and regulatory risks are escalating as AI deployment outpaces governance implementation. Stanford's AI Index shows that U.S. states are leading AI legislation amid slow federal progress: state-level AI laws increased from 49 in 2023 to 131 in 2024, while federal bills remain limited. Public attitudes also vary sharply by region; Stanford's research shows AI optimism ranging from 83% in China to just 39% in the United States.

IAPP's governance survey reveals that AI governance professionals come from different disciplines, with privacy professionals increasingly taking on AI governance roles. Organizations building AI governance programs from existing privacy functions adapt more successfully than those creating entirely new structures. The emergence of dedicated "Artificial Intelligence Governance Professional" certifications signals that AI governance is increasingly seen as a distinct profession with significant overlap with data privacy.

Organizations must establish comprehensive legal risk management that addresses regulatory requirements across multiple jurisdictions. For the most serious violations, the EU AI Act carries potential fines of up to €35 million or 7% of a company's worldwide annual turnover, whichever is higher, while various US state and federal regulations impose additional requirements. Companies need detailed documentation systems and audit trails to support regulatory investigations when required.
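The "whichever is higher" clause means the cap scales with company size: 7% of turnover exceeds €35 million once worldwide annual turnover passes €500 million. The sketch below computes the ceiling for this top penalty tier only. It assumes, per the Act's provision for SMEs and start-ups, that the lower of the two amounts applies to them; actual fines are set case by case below these maxima, and the function name is hypothetical.

```python
FIXED_CAP_EUR = 35_000_000.0   # fixed amount for the top penalty tier
TURNOVER_SHARE = 0.07          # 7% of worldwide annual turnover


def max_eu_ai_act_fine(worldwide_annual_turnover_eur: float, is_sme: bool = False) -> float:
    """Ceiling on fines for the most serious EU AI Act violations.

    Large companies: the higher of EUR 35M or 7% of turnover.
    SMEs and start-ups (assumed here): the lower of the two.
    """
    turnover_based = TURNOVER_SHARE * worldwide_annual_turnover_eur
    pick = min if is_sme else max
    return pick(FIXED_CAP_EUR, turnover_based)


for turnover in (100_000_000, 2_000_000_000):
    print(f"Turnover EUR {turnover:,}: ceiling EUR {max_eu_ai_act_fine(turnover):,.0f}")
```

For a company with €2 billion in turnover, the turnover-based figure (€140 million) is four times the fixed amount, which is why large enterprises should budget against the percentage rather than the headline €35 million.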

Recent enforcement demonstrates escalating legal risks that organizations must proactively address. In March 2024, the SEC fined investment advisers Delphia and Global Predictions a total of $400,000 for making false claims about their AI capabilities, marking the first enforcement actions against "AI washing." Delphia claimed it used customer data to power AI-driven investment strategies when it didn't, while Global Predictions called itself the "first regulated AI financial advisor" without backing up that claim. Regulators are actively enforcing AI-related compliance requirements, and organizations face significant financial penalties for misleading claims about their AI capabilities.

What’s the Right AI Governance Framework for Your Organization?

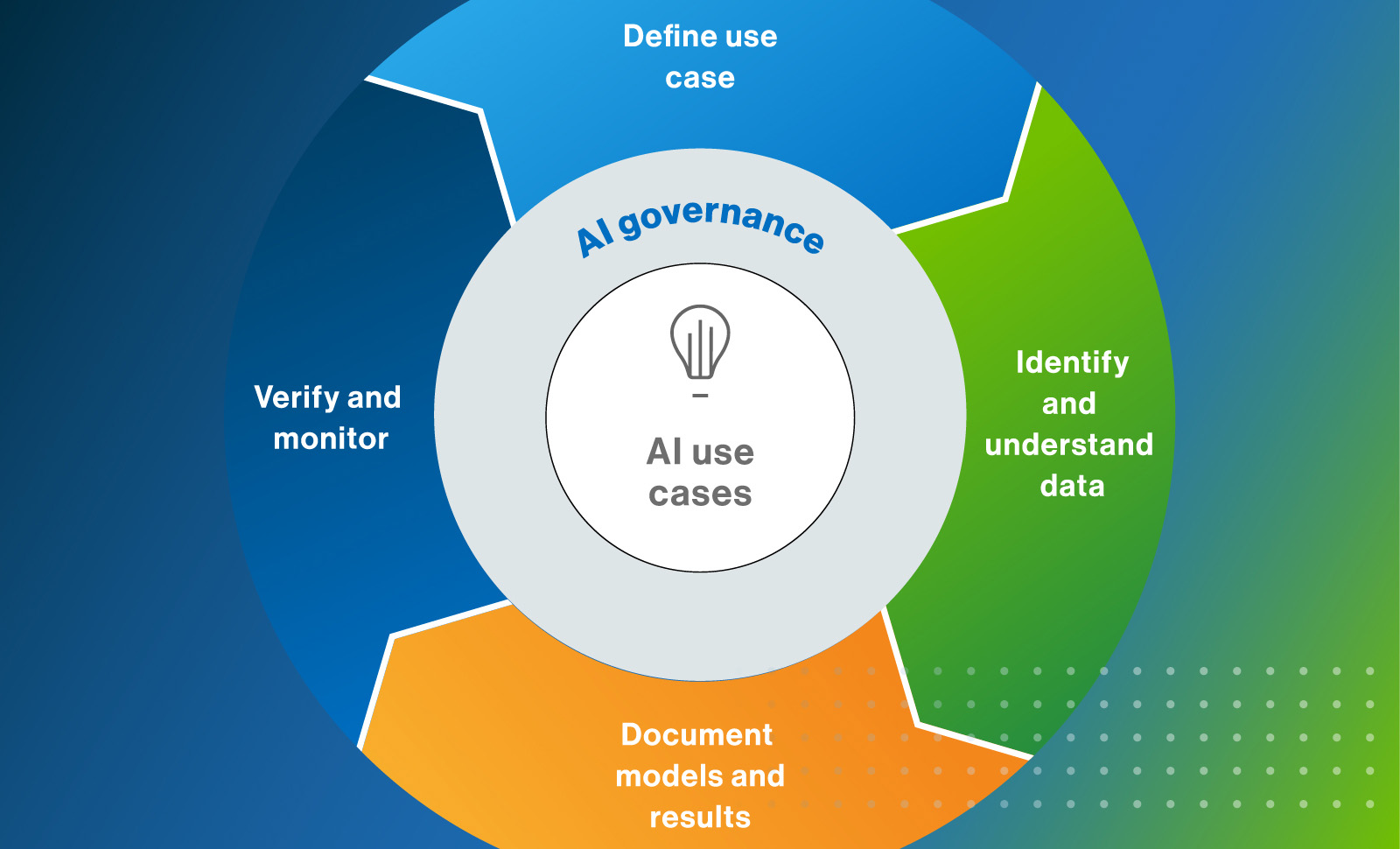

Organizations achieving measurable AI governance success implement comprehensive frameworks that balance innovation with responsible oversight. The most effective AI governance framework approaches share key characteristics that distinguish successful implementations from struggling ones.

Key Success Factors:

- Centralized Leadership with Cross-Functional Integration: Successful organizations establish dedicated AI governance committees with senior leadership championing initiatives. These frameworks integrate legal, risk, business, and technical expertise rather than operating in silos, as demonstrated by leading organizations that create enterprise-wide councils with actual decision-making authority.

- Risk-Based Classification and Controls: Leading frameworks categorize AI models by potential impact, often drawing on the EU AI Act's risk-based classification (unacceptable, high, limited, and minimal risk).

- Lifecycle Management and Continuous Monitoring: ISO/IEC 42001 sets formal requirements for risk assessment, control implementation, and lifecycle oversight. In practice, this means pre-deployment validation, real-time performance monitoring, and post-deployment impact assessment across the entire AI lifecycle.

- Compliance Integration and Documentation: Effective governance includes strict data privacy and security protocols aligned with regulations. Documentation requirements include AI model design specifications, performance monitoring logs, and compliance audit trails that demonstrate sustained compliance to regulators and stakeholders.
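One way to make compliance audit trails tamper-evident is to chain records by hash, so that editing or deleting any earlier entry invalidates every later one. The sketch below is a minimal in-memory illustration using only the Python standard library. The event fields and model name are hypothetical, and a production system would write to append-only storage and anchor the latest hash somewhere independent of the log itself.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def append_record(log: list[dict], event: dict) -> dict:
    """Append an event, chaining it to the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "event": event,
        "prev_hash": prev_hash,
    }
    # Hash a canonical JSON form (sorted keys) of everything except the hash.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["hash"] = hashlib.sha256(payload).hexdigest()
    log.append(record)
    return record


def verify_chain(log: list[dict]) -> bool:
    """Recompute every hash; any edited or reordered record breaks the chain."""
    prev_hash = GENESIS_HASH
    for record in log:
        body = {k: v for k, v in record.items() if k != "hash"}
        if body["prev_hash"] != prev_hash:
            return False
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        if hashlib.sha256(payload).hexdigest() != record["hash"]:
            return False
        prev_hash = record["hash"]
    return True


audit_log: list[dict] = []
append_record(audit_log, {"model": "claims-summarizer-v3", "action": "deployed", "approver": "j.doe"})
append_record(audit_log, {"model": "claims-summarizer-v3", "action": "monitoring_alert", "metric": "error_rate"})
print(verify_chain(audit_log))  # True
audit_log[0]["event"]["approver"] = "someone_else"  # simulate tampering
print(verify_chain(audit_log))  # False
```

Hash chaining only detects tampering; it does not prevent it, which is why the latest hash should be stored or published outside the system that writes the log.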

AI Governance Framework Examples

Three widely adopted frameworks offer different approaches to organizational AI governance:

NIST AI Risk Management Framework: This voluntary framework helps organizations incorporate trustworthiness into AI systems through a four-function structure (Govern, Map, Measure, Manage). Enterprises benefit from its flexibility and comprehensive guidance for building oversight without prescriptive compliance requirements, making it ideal for organizations seeking adaptable governance approaches.

ISO/IEC 42001 AI Governance Framework: This international standard provides formal AI governance requirements, including risk assessment, control implementation, and lifecycle oversight. Organizations that achieve certification demonstrate mature AI management systems with built-in resilience and scalability, making it valuable for enterprises seeking credible third-party validation of their governance capabilities.

EU AI Act Compliance Framework: The world's first comprehensive AI legal framework establishes risk-based rules with specific human oversight requirements for high-risk systems. It applies to providers placing AI systems on the EU market and to providers and deployers outside the EU whose systems' outputs are used in the EU, making this framework essential for global enterprises regardless of headquarters location.

Bridging the Governance Gap

The 2024-2025 period represents a critical inflection point where AI governance has shifted from optional best practice to mandatory business infrastructure. Stanford's AI Index data shows that while AI capabilities advance rapidly, the governance gap continues to widen. Organizations implementing a comprehensive AI governance framework gain competitive advantages, while those delaying face escalating compliance costs and regulatory risks.

The Pieces Technologies settlement serves as a warning shot: transparency about AI limitations, potential harms, and accuracy claims is no longer optional.

Will your company implement governance proactively—or reactively under regulatory pressure?
