4 Forces Reshaping AI Energy Management in 2025 and Beyond

AI infrastructure demands are reshaping global energy systems. From $7T in projected investments to community resistance, enterprises must plan for compute capacity, data locality, and regulation now.

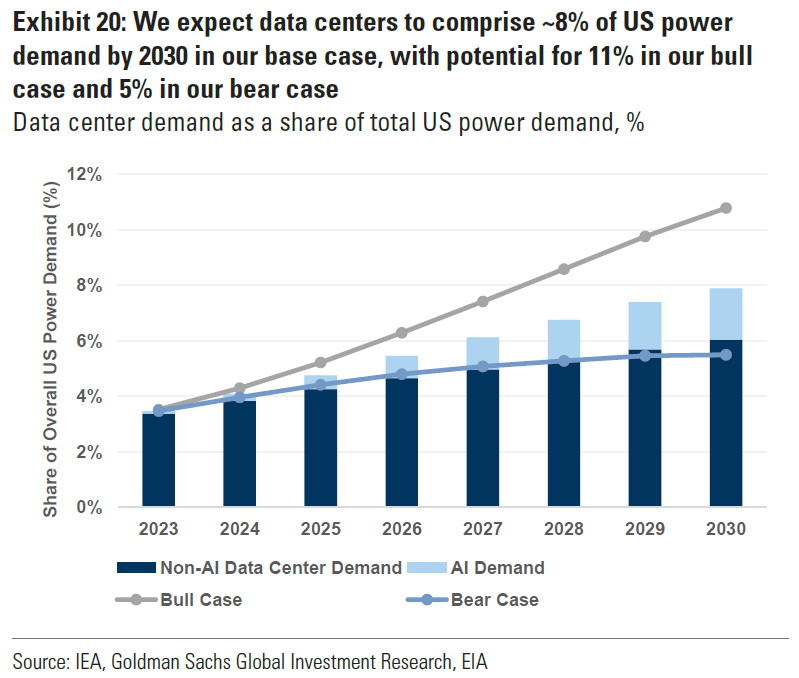

AI energy management has become the critical bottleneck in AI infrastructure development, with data centers projected to consume up to 12% of total U.S. electricity by 2028.

The concentration of AI infrastructure creates strategic dependencies, as evidenced by Nvidia's $600 billion market cap loss following DeepSeek's efficient AI model breakthrough.

Effective AI energy management requires enterprises to integrate regulatory compliance, community engagement, and geopolitical risk planning into their core AI strategy.

On a sprawling 485-acre site in King George County, Virginia—roughly the size of 370 football fields—construction crews will soon build one of the world's largest data centers.

The campus, when fully operational, will consume 1.2 gigawatts of electricity. To put that in perspective, this single facility will draw enough instantaneous power to supply one million U.S. homes, equivalent to roughly the entire output of one large nuclear reactor.
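As a rough check on that comparison, the arithmetic can be sketched in a few lines of Python. Only the 1.2 gigawatt load and the one-million-home figure come from the article; the assumption that the campus runs at full load around the clock is a simplification made here for illustration.

```python
# Back-of-the-envelope check of the 1.2 GW comparison.
# Assumption (not from the article): the campus draws its full load all year.

CAMPUS_POWER_GW = 1.2        # from the article
HOMES_SUPPLIED = 1_000_000   # from the article
HOURS_PER_YEAR = 8_760       # 365 days * 24 hours

# Average power per home implied by the comparison, in kilowatts
# (1 GW = 1,000,000 kW).
kw_per_home = CAMPUS_POWER_GW * 1_000_000 / HOMES_SUPPLIED

# Annual energy if the campus ran at full load, in terawatt-hours
# (GW * hours = GWh; 1 TWh = 1,000 GWh).
annual_twh = CAMPUS_POWER_GW * HOURS_PER_YEAR / 1_000

print(f"Implied average draw per home: {kw_per_home:.1f} kW")
print(f"Annual energy at full load: {annual_twh:.1f} TWh")
```

Under these assumptions, the comparison implies an average draw of about 1.2 kW per home, and the campus would use roughly 10.5 TWh a year if it never dropped below full load.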

The scale is staggering, but the Virginia project represents just one piece of a much larger transformation reshaping the global economy. As artificial intelligence transitions from experimental technology to business-critical infrastructure, the race to build the physical foundation for AI has triggered the most significant infrastructure investments in modern history, reaching as much as $371 billion in 2025 alone.

Globally, demand for AI-related compute power is surging, with the required investment predicted to run into the trillions of dollars over the next five years. Major tech companies are already making sweeping moves to secure data centers and the power to run them. But their strategies aren't going unchecked: significant citizen-level resistance is building, while geopolitical tensions and regulatory forces also shape the way forward. For the growing number of organizations incorporating AI into their strategies, AI energy management will dictate where and how AI is deployed, and it introduces new strategic considerations to weigh.

The $7 Trillion Computing Arms Race

The numbers behind AI's infrastructure demands are massive. Research shows that by 2030, data centers are projected to require $6.7 trillion worldwide to keep pace with the demand for compute power. Data centers equipped to handle AI processing loads alone will require $5.2 trillion in capital expenditures, while traditional IT applications will require an additional $1.5 trillion.

This investment surge stems from AI's voracious appetite for computational resources. At 2.9 watt-hours per ChatGPT request, AI queries require about 10 times the electricity of traditional Google queries. Analysis suggests that global demand for data center capacity could almost triple by 2030, with about 70 percent of that demand coming from AI workloads.

The compute power value chain spans from real estate developers building data centers to utilities powering them, semiconductor firms producing specialized chips, and hyperscalers hosting massive amounts of data. Each faces the same fundamental challenge around AI energy management: deciding how much capital to allocate while remaining uncertain about AI's future growth trajectory.

1. AI Energy Management: The Critical Bottleneck

The existing U.S. electrical grid, much of which was built 50 to 70 years ago and relies on components nearing or exceeding their intended lifespan, was not designed for the loads AI creates, particularly hyperscale data centers that consume hundreds of megawatts. As Nvidia CEO Jensen Huang summarizes, “AI is now infrastructure, and this infrastructure, just like the internet, just like electricity, needs factories.”

Energy has emerged as the single most critical constraint in the development of AI infrastructure. The U.S. Department of Energy projects that data centers could consume as much as 580 TWh annually by 2028, representing up to 12% of total U.S. electricity consumption. Goldman Sachs research points in the same direction, with 11% as its bull case. That would be a sharp increase from the 176 TWh consumed by data centers in 2023, which accounted for 4.4% of total U.S. electricity consumption.
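The 2023 and 2028 data points also imply what total U.S. electricity consumption would have to be for those shares to hold. The following is a minimal sketch that uses only the figures quoted above; because the 2028 numbers are upper bounds ("as much as," "up to"), the implied total is a rough illustration, not a forecast.

```python
def implied_total_twh(datacenter_twh: float, share_percent: float) -> float:
    """Total consumption implied by a data center figure and its share."""
    return datacenter_twh / (share_percent / 100)

# Figures quoted in the article.
total_2023 = implied_total_twh(176, 4.4)   # 2023 actuals
total_2028 = implied_total_twh(580, 12)    # 2028 upper-bound projection

# How many times larger 2028 data center use would be than 2023 use.
datacenter_growth = 580 / 176

print(f"Implied U.S. total, 2023: {total_2023:,.0f} TWh")
print(f"Implied U.S. total, 2028: {total_2028:,.0f} TWh")
print(f"Data center growth, 2023 to 2028: {datacenter_growth:.1f}x")
```

Read this way, data center consumption would grow about 3.3 times while the implied national total rises from roughly 4,000 TWh to about 4,800 TWh, which is why the data center share climbs so steeply.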

Tech giants are responding by making unprecedented investments in AI energy management. Under a recent deal with Kairos Power, Google will purchase power from a fleet of small modular reactors, with the first reactor online by 2030 and a total of 500 megawatts added to the grid through 2035.

Michael Terrell, Google's senior director for energy and climate, explained the strategic imperative: "We believe that nuclear energy has a critical role to play in supporting our clean growth and helping to deliver on the progress of AI. The grid needs these kinds of clean, reliable sources of energy that can support the build out of these technologies."

Google is far from the only major tech company making these kinds of moves. Microsoft is pursuing a similar AI energy management strategy, with Constellation Energy restarting Three Mile Island to power Microsoft data centers. The company also recently expanded AI-capable data centers in Ireland and the Netherlands.

Organizations must model their AI compute demands for the next 5-10 years, accounting for both training and inference workloads that can scale exponentially. Companies that fail to integrate energy planning into AI strategy risk facing power constraints that could limit AI deployment capabilities. Strategic moves include diversifying infrastructure dependencies across multiple regions and providers, while considering renewable power partnerships and energy storage solutions.
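One way to start that modeling is a simple compound-growth projection that treats training and inference as separate workloads. The sketch below is illustrative only: the `Workload` class, the function names, the baseline energy figures, the growth rates, and the capacity limit are all hypothetical and do not come from any source cited here.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    baseline_mwh: float    # hypothetical current annual energy use
    annual_growth: float   # hypothetical compound rate, e.g. 0.25 = 25%


def project_demand(workloads, years):
    """Return (year_offset, total_mwh) pairs under compound growth."""
    projection = []
    for year in range(years + 1):
        total = sum(
            w.baseline_mwh * (1 + w.annual_growth) ** year for w in workloads
        )
        projection.append((year, total))
    return projection


def first_year_exceeding(projection, capacity_mwh):
    """Return the first year offset where demand exceeds capacity, or None."""
    for year, total in projection:
        if total > capacity_mwh:
            return year
    return None


# Hypothetical inputs: inference is assumed to grow faster than training.
workloads = [
    Workload("training", baseline_mwh=2_000, annual_growth=0.25),
    Workload("inference", baseline_mwh=1_000, annual_growth=0.60),
]
projection = project_demand(workloads, years=10)
breach_year = first_year_exceeding(projection, capacity_mwh=20_000)

for year, total in projection:
    print(f"Year {year}: {total:,.0f} MWh")
print("Contracted capacity exceeded in year:", breach_year)
```

Even a toy model like this makes the planning question concrete: it shows when projected demand would outrun contracted power, and how sensitive that date is to the growth rate assumed for inference. A real model would replace the fixed growth rates with scenario ranges and add regional capacity and pricing.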

Effective AI energy management also requires optimizing cloud costs and infrastructure efficiency. Organizations implementing FinOps principles with AI forecasting can reduce cloud costs by up to 20%, while improving visibility into energy consumption patterns across distributed infrastructure. This approach helps enterprises balance performance requirements with sustainability goals while managing the exponential growth in compute demands.

2. When Communities Push Back: The Politics of AI Energy Management

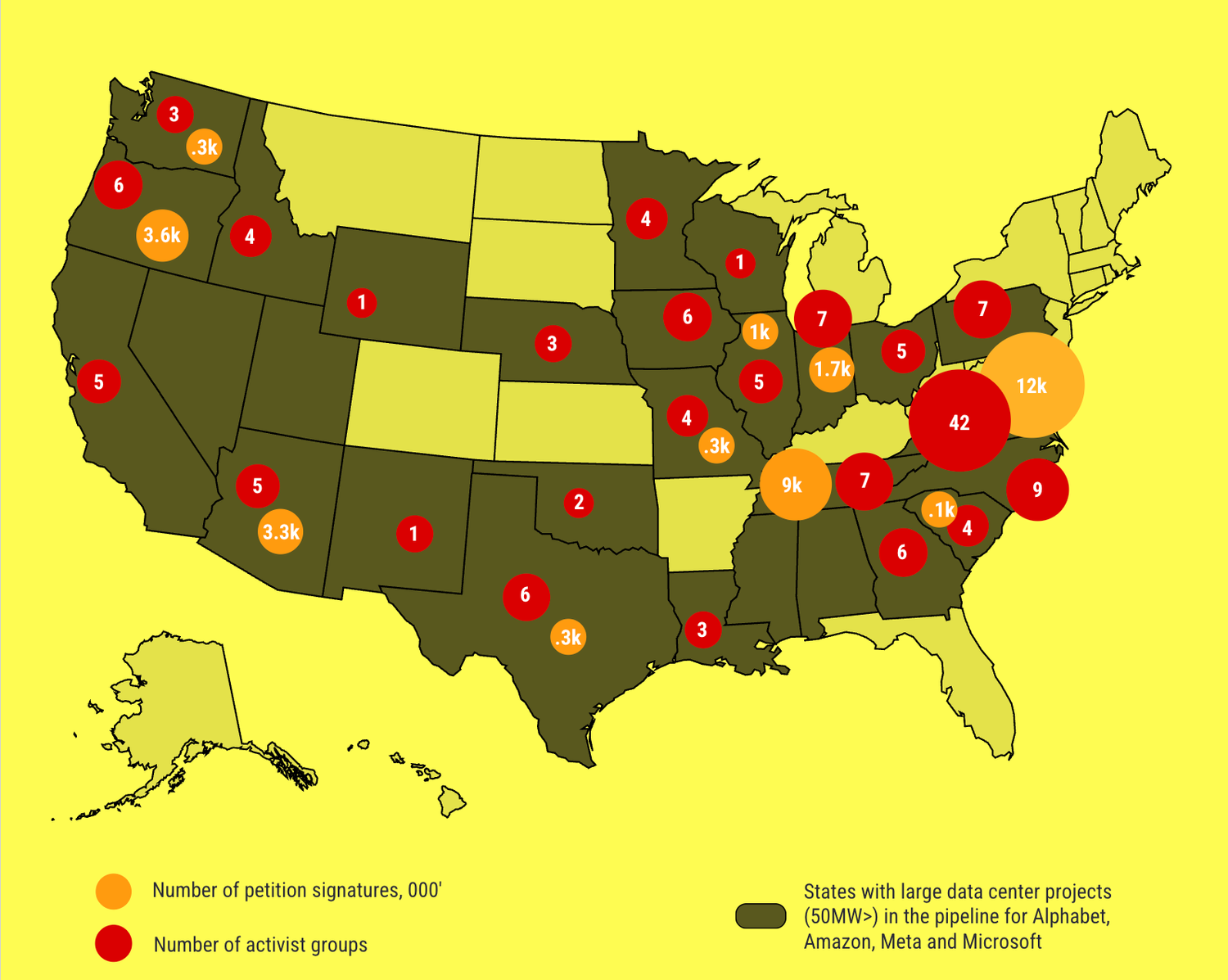

The massive scale of AI infrastructure is triggering unprecedented community resistance. Data Center Watch — an objective, nonpartisan research firm — reports that $64 billion in U.S. data center projects have been blocked or delayed by local opposition. In Virginia alone, $900 million in projects have been blocked and $45.8 billion in projects have been delayed.

Communities are grappling with legitimate concerns about grid strain, water usage, and quality of life impacts. In Bessemer, Alabama, a proposed 700-acre data center development has drawn fierce opposition from local residents citing concerns about quality of life, noise, pollution, and environmental impact. Despite vocal community resistance, the Planning and Zoning Commission approved the rezoning 4-2.

The opposition has proven both bipartisan and sophisticated. As Data Center Watch notes, "Republican officials often raise concerns about tax incentives and energy grid strain, while Democrats tend to focus on environmental impacts and resource consumption."

Meta's Louisiana project illustrates both the promise and the challenge of these initiatives in the US. The company is building a $10 billion data center on 2,250 acres of former soybean fields about 250 miles north of New Orleans. The facility will ultimately create just 500 permanent jobs, though employment will briefly swell to about 5,000 during construction.

Amidst opposition from many, Louisiana Governor Jeff Landry defended the project's job creation: "Five jobs was a big deal in this area. I don't know where the people are who complain about 500 jobs, but I'll take them in Louisiana. We will take every job we can get."

Companies planning AI energy management investments should engage regulators and communities early in the development process, conduct transparent environmental impact assessments, and consider distributed edge computing approaches that can bring computing closer to local communities without stressing centralized grids.

3. Geopolitical Stakes and Nvidia Disruption

Demand for AI infrastructure has created new geopolitical vulnerabilities, underscoring the strategic importance of legislation like the 2022 CHIPS Act, which authorizes $39 billion in subsidies for chip manufacturing on U.S. soil, along with 25% investment tax credits and $13 billion for semiconductor research. These vulnerabilities became evident again in August 2025, when Nvidia paused production of its H20 AI chips designed for the Chinese market after Beijing raised national security concerns about potential tracking capabilities. Nvidia reportedly asked Arizona-based Amkor Technology, which handles the advanced packaging of the H20 chips, and South Korea's Samsung Electronics, which supplies high-bandwidth memory for them, to halt production.

An earlier shock hit the AI infrastructure market in January 2025, when Chinese startup DeepSeek released its R1 AI model, claiming to achieve ChatGPT-like performance for under $6 million in development costs, a fraction of the hundreds of millions typically spent by U.S. companies. The announcement triggered Nvidia's worst single-day decline since March 2020, with the company losing nearly $600 billion in market value.

The DeepSeek breakthrough raised fundamental questions about AI infrastructure assumptions. Marc Andreessen called it "one of the most amazing and impressive breakthroughs I've ever seen," while investors questioned whether massive infrastructure investments were necessary if AI models could be built more efficiently.

For enterprises relying on these chips—cloud providers, automakers developing autonomous systems, and manufacturers exploring AI optimization—this created uncertainty in supply chains. While projects didn't necessarily stop outright, companies faced potential delays, tighter capacity, and higher costs. The episode highlighted a crucial truth: even the most well-resourced enterprises are vulnerable to supply chain bottlenecks and geopolitical shifts.

The concentration of AI infrastructure creates strategic dependencies. AI chips are concentrated in approximately 30 countries, dominated by the U.S. and China, while "compute deserts" persist elsewhere. To combat this disparity, the European Union has launched a €200 billion initiative for AI infrastructure, services, and gigafactories, while the UK risks becoming a net importer of computing power, ultimately outsourcing innovation despite substantial R&D investments.

Amidst the disruptions, enterprise leaders should implement comprehensive geopolitical risk management by diversifying compute infrastructure across multiple regions and suppliers, monitoring national AI strategies and export controls, and developing contingency workflows for access disruptions. Forward-thinking organizations build relationships with multiple vendors and cloud providers while maintaining flexibility to shift workloads between regions as conditions change.

The key is treating AI energy management, data locality, and supply chain resilience as strategic assets rather than operational concerns.

4. Regulatory Forces Reshaping AI Energy Management

In the United States, executive orders are promoting streamlined data center permitting and semiconductor manufacturing subsidies. At the same time, the proposed EU Cloud and AI Development Act aims to address Europe's data center capacity gap through €200 billion in targeted investments.

Louisiana's experience with Meta illustrates regulatory influence on investment decisions. The state offered a 20-year sales tax exemption for data centers built before 2029. Susan Bourgeois, Secretary of Economic Development, was clear about the necessity: "The Meta folks made it clear to us from day one that in order for a project like this to happen in any state, that exemption or rebate—whatever the formula is—has to exist."

The incentive could cost the state "tens of millions of dollars or more each year, possibly through fiscal year 2059" according to the Legislative Fiscal Office. Yet Bourgeois defended the investment: "This wasn't about what the state would win or lose, just that one isolated sales tax. This was about we want to compete with Texas. We want to compete with Mississippi, Alabama, Georgia, South Carolina, all our Southern neighbors."

Companies operating across multiple jurisdictions must simultaneously navigate different AI compliance frameworks. The EU's risk-based approach affects how AI energy management systems are designed and deployed, while data residency requirements influence infrastructure location decisions. However, regulatory clarity also enables strategic planning—organizations that align with emerging standards early can gain competitive advantages in regulated markets. Companies should maintain cross-border compliance frameworks and conduct regular internal audits to ensure AI models and compute sourcing meet evolving regulatory standards.

The New Reality of AI Infrastructure

The transformation underway extends far beyond the deployment of technology. As the King George County data center moves toward construction, AI infrastructure is operating at a scale that reshapes regional economies, energy systems, and community dynamics. The facilities being built today will define competitive advantages for decades, making AI energy management a critical determinant of long-term business success.

Companies that secure reliable access to compute power, energy, and regulatory clearance will shape AI's future development. Those that fail to plan an AI energy management strategy may struggle to deploy AI capabilities at a competitive scale.

The race for AI infrastructure ultimately comes down to strategic positioning in three critical areas: compute capacity, data locality, and regulatory alignment. Companies must treat infrastructure not as a supporting function, but as strategic capital. The organizations that succeed will be those that integrate infrastructure planning into their core AI strategy, building resilient, scalable, and compliant systems that can adapt to both technological breakthroughs and geopolitical shifts.
