Open-Source AI Models May Be the Biggest Shift Since Cloud Computing

Open-source AI models are helping enterprises move faster, cut costs, and maintain control. See how Shopify, Walmart, Spotify, and AT&T are leveraging open ecosystems to drive innovation.

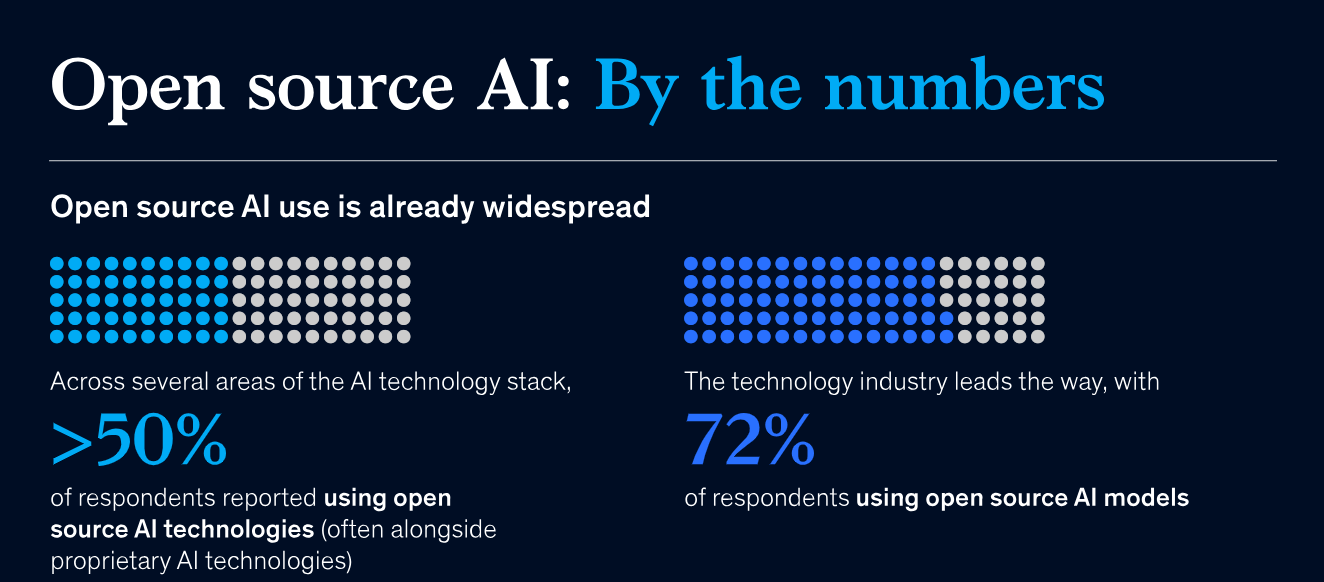

Many teams are turning to open-source AI to work faster and tailor models to their needs. More than 50% of organizations now use open-source AI across their tech stacks.

Enterprises are seeing real cost and efficiency gains from open-source AI workflows. For instance, AT&T cut the time it takes to process a day’s worth of call summaries from 15 hours to under five.

Demand for more control over data and infrastructure is accelerating open-source use. Nearly 76% of enterprises plan to expand their open-source AI investments.

Matt Colyer kept hearing the same thing from merchants: running an online business felt like juggling dozens of tasks at once. As Shopify’s Director of Product Management for Sidekick, he saw that strain everywhere. Shopify powers millions of businesses, from first-time entrepreneurs to global brands, and merchants were switching between product updates, customer questions, analytics, fulfillment, and marketing, often in the same minute.

Matt and his team could clearly see that merchants needed support that moved as fast as their workday. That insight sparked Sidekick, Shopify’s AI assistant built to act like “the co-founder you wish you had.” But creating an assistant that could understand each merchant’s catalog, customers, and workflow demanded a level of control that closed-source APIs couldn’t deliver.

The problem wasn’t just scale; it was the speed of change. Shopify ships fast, the AI ecosystem moves even faster, and Sidekick required deep integration with merchant data and internal tools. Closed systems couldn’t guarantee consistent behavior or let the team test and adapt quickly. They needed to own the training process end-to-end.

So Shopify adopted open-source models, giving them the control and flexibility to fine-tune behavior, experiment safely, and evolve Sidekick as their platform changed.

“I think in general, the way to frame it is like open source, the power is in the control. It's like you're guaranteed to run this exact model with this exact set of training data with this exact outcome. So it's very predictable. You have way more control over the training process and like the post-training process.”

- Matt Colyer, Director of Product Management for Sidekick

With full control over their models, Shopify pushed syntax-validation accuracy to 99%, brought its LLM judge close to human-level performance, and made Sidekick far more reliable in real merchant conversations. For merchants, that meant quicker answers, fewer errors, and more time to actually grow their business.

Shopify’s experience is just one example of how open-source AI is reshaping enterprise technology. Major enterprises are already leaning in: Wells Fargo is piloting Llama-based workflows, Goldman Sachs is using open-source AI models for research and automation, and IBM is integrating open-source LLMs into internal productivity tools.

In this article, we’ll explore how companies from different industries are using open-source AI models to move faster, reduce costs, and retain control over their technology.

The Growing Momentum Behind Open-Source AI Models

Open source has been part of the enterprise stack for decades. It began with operating systems, databases, and frameworks, the core infrastructure that modern software is built on. Companies embraced it to move faster, avoid vendor lock-in, and retain control over their technology.

Today, that momentum is accelerating again, this time toward open-source AI. As closed systems create bottlenecks, enterprises are turning to models they can run themselves, update at their own pace, and tailor directly to business needs.

And the shift is already happening. In fact, surveys show that more than 50% of organizations now use open-source AI across their stacks.

Another reason for the rise in open-source AI adoption is the rapid expansion of the ecosystem surrounding it. Platforms like GitHub, Hugging Face, and other open-source communities have made it easier than ever to share models, collaborate on new ideas, and build tools that accelerate development.

This matters because open-source large language models (LLMs) let organizations run, customize, and audit the models themselves. Proprietary systems such as GPT, Claude, or Gemini, by contrast, operate behind vendor APIs that limit control over deployment and data handling.

Also, with new libraries, community-driven utilities, and research updates appearing almost weekly, teams have a steady stream of resources to build on. Taken together, this ecosystem dramatically lowers the barrier to adoption and makes open-source AI far easier to integrate into existing workflows without slowing the business.

DeepSeek is a great example of how powerful such an ecosystem can be. The company built its reasoning model by layering its own techniques on top of existing open-source tools and community frameworks.

“DeepSeek has profited from open research and open source (e.g., PyTorch and Llama from Meta). They came up with new ideas and built them on top of other people’s work. Because their work is published and open source, everyone can profit from it.”

- Yann LeCun, Chief AI Scientist at Meta

This helped DeepSeek move quickly, keep model training costs low, and ship a competitive model despite limited access to the most advanced hardware. Simply put, open-source ecosystems give teams a head start instead of forcing them to reinvent everything from scratch.

Why Teams Are Turning to Open Source AI Applications to Move Faster

Open-source AI models drive enterprise innovation by enabling teams to build useful AI solutions faster and at lower cost. For instance, teams use open-source LLMs to adapt the model architecture, tune it on their own data, and experiment without waiting for vendor releases.

“By democratizing access to innovation ecosystems, open source puts the tools of creation into everyone’s hands… Innovation accelerates when expertise flows freely… The future of AI belongs to ecosystems, not empires.”

- Vilas S. Dhar, President, Patrick J. McGovern Foundation

Here’s what makes open-source AI projects such a strong driver of enterprise innovation:

- Customization and Data Control: Open-source AI gives enterprises tighter control over their systems and their data. Teams can fine-tune AI models in-house, keep sensitive information internal, and tailor models to their specific needs. For example, Wells Fargo, one of the largest banks in the U.S., uses open-source models like Llama for internal workflows so customer and financial data stays securely inside its own environment. This control is becoming a priority for many organizations: 42% say that open-source generative AI provides greater transparency and access to more diverse data, and 89% of IT leaders consider enterprise open-source AI more secure than other options.

- Scalability and Cost Efficiency: As workloads grow, open-source frameworks let enterprises scale across cloud, on-prem, and hybrid environments without being tied to a single vendor’s pricing. The Recording Academy, the organization behind the GRAMMY Awards, demonstrates this well: by building its AI Stories engine on top of the open-source Llama 2 model, the team can run and scale generative content across its environment without incurring unpredictable API usage fees during peak traffic on GRAMMY night. This flexibility gives teams smoother expansion options and more predictable costs, and often lower ones: 60% of decision-makers reported lower implementation costs with open-source AI than with similar proprietary tools. These savings often determine whether companies stick to small pilots or move to full-scale deployment.

- Faster Innovation and Collaboration: Open-source AI communities help enterprises move faster by sharing research, tools, and improvements openly. Teams can adopt new capabilities as soon as they’re available rather than waiting for closed vendors to ship updates. Intuit, a global financial-technology company, demonstrates this advantage: the company built its Intuit Assist platform using a mix of open-source models, allowing its teams to integrate new LLM advancements quickly and adapt them to tax, accounting, and marketing workflows. This access to rapid iteration lets enterprises bring new features to market far faster than closed systems typically allow.

Next, we’ll walk through three use cases that demonstrate how enterprises are applying open-source AI to move faster, cut costs, and keep complete control of their technology.

How Meta’s Open-Source Llama Model Helps Spotify Deliver Better Recommendations

As Spotify’s music library grew and user expectations rose, a subtle problem emerged: recommendations were becoming broader but were losing the personal touch that made them feel meaningful. Users didn’t want endless suggestions anymore; they wanted recommendations they could trust. To deliver that level of depth, Spotify needed control over how data flowed, how the model behaved, and how each recommendation was generated.

That realization led Spotify’s AI team to adopt open-source LLMs. Specifically, Meta’s Llama gave them the ability to run everything in-house, fine-tune the model to match their editorial voice, and protect sensitive listening data without relying on external vendors.

Once the foundation was in place, the goal became clear: help listeners understand why a song, podcast, or audiobook might resonate with them. By combining Llama’s knowledge with Spotify’s own domain expertise, the team created short, meaningful explanations that brought the human touch back to every recommendation.

Because the model was open source, Spotify could shape every stage of how those explanations were generated. The team corrected attribution issues, reduced hallucinations (incorrect text generated by the model), aligned tone with the expectations of millions of listeners, and designed a safety workflow tailored to its content ecosystem.

The impact was immediate. Users were up to four times more likely to click when a recommendation included a clear explanation. Engagement climbed, discovery expanded, and niche creators found new listeners. Spotify’s results show what deep customization and full data control make possible: open-source technology let the company shape a model to its product, safeguard user data, and deliver an experience no off-the-shelf system could match.

Reducing Operational Costs at Walmart Using Open Source AI Models

Within Walmart’s massive retail and logistics network, a growing issue emerged. With more than 10,500 stores, thousands of fulfillment and distribution centers, 25,000 engineers, and millions of devices connected at any moment, the company’s infrastructure had become too large and too expensive to manage with traditional vendor-dependent systems.

For Dave Temkin, Walmart’s VP of Infrastructure, the signs were clear. The tools the company bought from different vendors worked, but each one was unique, complicated, and costly to maintain. Engineers had to jump between many systems, and every change took too long. For a company built on low prices and efficiency, that wasn’t sustainable.

“We have to keep this business operating 24x7. We have an infrastructure that has grown organically over the years. We can’t just come in and say, ok, we’re going to rip something out and move to something new.”

- Dave Temkin, Walmart VP of Infrastructure

At that point, open source became the practical option. Walmart partnered with the open-source community to deploy SONiC, an open-source network operating system, across its equipment. SONiC lets Walmart manage all its network switches the same way, rather than treating each one as a special case. It also helped automate changes across thousands of devices, which made the whole system easier to keep running smoothly.

Walmart also added L3AF, an open-source platform for deploying and managing eBPF programs that acts like a smart control center. It helped engineers watch network traffic more clearly, spot issues faster, and fix problems more easily across their massive footprint. Together, SONiC and L3AF created a single, simpler system that cut costs and made Walmart’s network more reliable.

The business results were significant. By reducing its dependence on expensive vendor tools and simplifying its core technology, Walmart lowered operational costs and strengthened the stability of its entire network. As Temkin explained, “Every dollar that we save in the network is going out to save our customers money, and that’s paramount to everything else.”

With open-source technology, Walmart showed that even one of the world’s largest companies can run a global network at lower cost, with greater control and more flexibility. Its experience shows that open-source systems can deliver measurable ROI at enterprise scale.

AT&T Weighs Open Source vs Closed Source AI to Improve Speed and Cut Costs

AT&T manages one of the largest customer networks in the world, where even small delays multiply across millions of interactions. This pressure was visible in AT&T’s customer service pipeline.

Every year, nearly 40 million calls contain clues about churn, product issues, and emerging trends. For a long time, teams reviewed call summaries manually and sorted them into more than 80 categories. The process was reliable but slow.

AT&T turned to large language models like GPT-4, and the early gains were clear: the approach helped the company retain 50,000 customers a year. But it came with serious constraints: high compute costs, long GPU queues, and almost 15 hours to process a single day of summaries. At AT&T’s scale, those delays slowed innovation.

Interestingly, that’s where the team saw an opportunity. Rather than continue paying for a single large, expensive model, they built a flexible, multi-model workflow they could control end-to-end at a much lower cost. They combined several open-source AI models, each tuned to a different complexity level, and ran them through a unified pipeline.

Simple calls were routed to smaller, faster models, while more complex cases were routed to increasingly capable models. The largest models in the pipeline handled the most challenging tasks. This tiered approach balanced cost, accuracy, and speed in a way a single-model system simply couldn’t match.
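To make the tiered idea concrete, here is a minimal Python sketch of complexity-based routing. It is not AT&T’s implementation: the tier names, thresholds, relative costs, and the keyword-based `complexity_score` heuristic are all hypothetical stand-ins for whatever classifier and open-source models a real pipeline would use.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    """One model tier in a hypothetical routing pipeline."""
    name: str              # placeholder model name (assumption)
    max_complexity: float  # highest complexity score this tier accepts
    relative_cost: float   # illustrative cost per call, not real pricing


# Ordered from cheapest/fastest to most capable; all values are assumptions.
TIERS = [
    Tier("small-model", 0.3, 1.0),
    Tier("medium-model", 0.7, 4.0),
    Tier("large-model", 1.0, 20.0),
]

# Toy signal words standing in for a real complexity classifier.
KEYWORDS = ("cancel", "outage", "billing", "dispute", "upgrade")


def complexity_score(summary: str) -> float:
    """Return a score in [0, 1]: longer, keyword-heavy summaries score higher."""
    length_part = min(len(summary.split()) / 200, 1.0)
    text = summary.lower()
    keyword_part = sum(k in text for k in KEYWORDS) / len(KEYWORDS)
    return 0.5 * length_part + 0.5 * keyword_part


def route(summary: str) -> Tier:
    """Send the summary to the cheapest tier whose threshold covers its score."""
    score = complexity_score(summary)
    for tier in TIERS:
        if score <= tier.max_complexity:
            return tier
    return TIERS[-1]  # fallback: the most capable model


def route_batch(summaries: list[str]) -> tuple[Counter, float]:
    """Count calls per tier and total their illustrative relative cost."""
    counts: Counter = Counter()
    total_cost = 0.0
    for summary in summaries:
        tier = route(summary)
        counts[tier.name] += 1
        total_cost += tier.relative_cost
    return counts, total_cost


if __name__ == "__main__":
    calls = [
        "Customer asked about store hours.",
        "Customer wants to cancel after an outage and a billing dispute",
    ]
    print(route_batch(calls))
```

In a real system the scoring step would more likely be a small classifier model of its own. The design point is the same either way: the most expensive tier only sees the traffic that cheaper tiers are not expected to handle well.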

Processing time dropped from 15 hours to under five, costs fell to 35% of what the GPT-4 workflow cost, and accuracy held steady at 91%. For AT&T, this wasn’t just a technical upgrade.

It changed how teams within AT&T worked. Data science, engineering, and operations could now iterate and deploy improvements in the same cycle, mirroring the rapid experimentation and shared learning that define open-source AI ecosystems. The result was a system that moved faster, cost less, and gave AT&T’s teams far more control, unlocking faster innovation and tighter collaboration across the business.

Open Source Is Becoming the Competitive Edge in Enterprise AI

Just as Shopify gained control over Sidekick by moving from closed-source APIs to open-source models, enterprises across every industry are discovering the same advantage. Open-source ecosystems give teams the freedom to fine-tune models, integrate them with their own data and tools, and ship improvements at the speed their business demands.

Overall, open-source AI adoption is accelerating, with 76% of organizations planning to expand their use of these technologies. The companies moving fastest today are the ones choosing ecosystems they can shape, audit, and improve themselves.

