How CIOs Use AI Partnerships to Escape Vendor Lock‑In

Explore how collaboration and partner ecosystems are emerging as key solutions to vendor lock-in, helping enterprises scale and innovate faster through open, integrated AI frameworks.

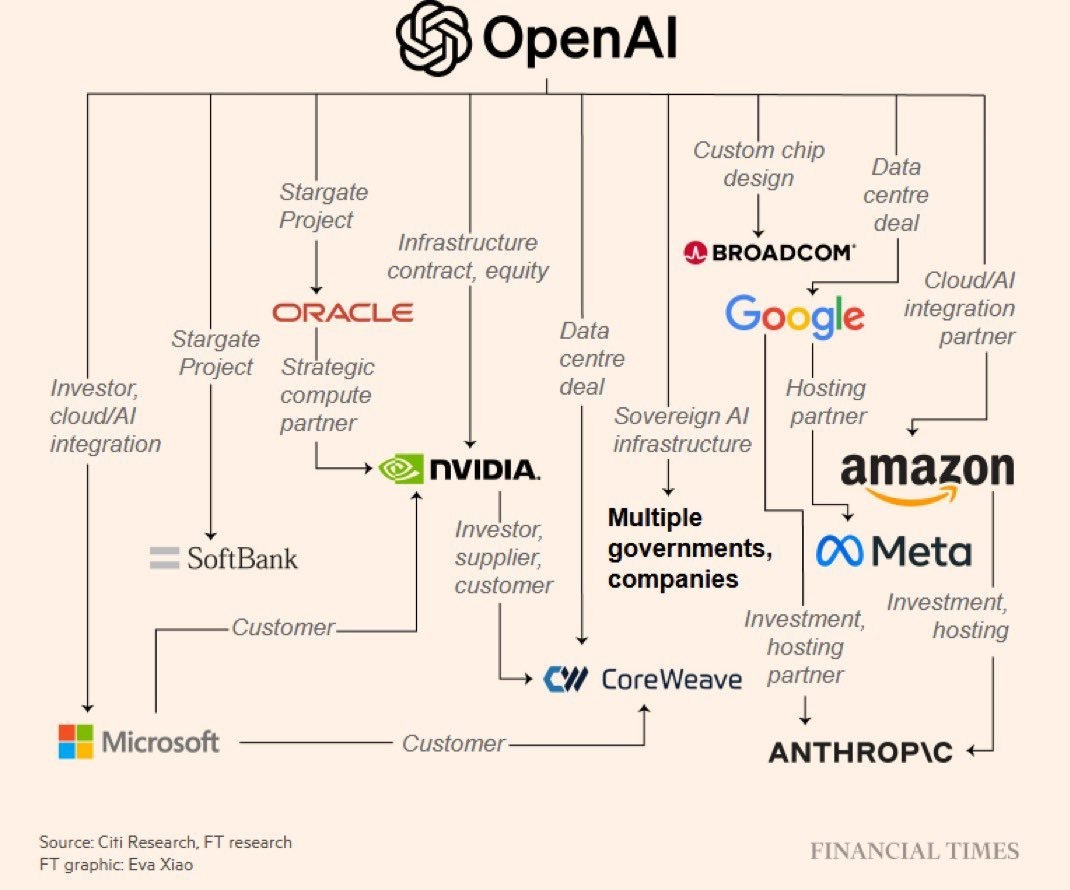

Nearly 7 in 10 CIOs worry about public cloud lock-in, and companies like OpenAI are applying that lesson across the AI stack by diversifying chips, models, and infrastructure to boost flexibility and innovation.

As AI adoption accelerates, partner ecosystems are becoming essential to reduce technical risk, improve efficiency across the AI infrastructure stack, and strengthen governance and compliance.

From 100× workflow gains to measurable productivity boosts, companies like Tines, Syneos Health, and Barré Technologies show how AI orchestration can help scale innovation, improve oversight, and turn collaboration into a competitive edge.

In early October 2025, OpenAI took a decisive step toward independence. After years of relying almost entirely on Nvidia’s GPUs, the company announced a multibillion-dollar partnership with AMD to purchase 6 gigawatts of its new MI450 chips by 2030. The move wasn’t just about hardware diversification; it was about freeing itself from vendor lock-in.

For years, enterprises have depended on trusted technology partners to keep their systems and enterprise AI platforms running. But that reliability often comes with strings attached. Nearly 7 in 10 CIOs say they’re worried about vendor lock-in in the public cloud, reflecting how even strategic alliances can quietly turn into structural dependencies.

Now, a growing number of AI leaders are rethinking that model. OpenAI’s latest partnership signals a deliberate shift away from single-stack dependence, not because companies can’t build or control the entire stack, but because doing so limits speed, flexibility, and innovation. The future isn’t about owning every layer of AI infrastructure; it’s about orchestrating across the AI technology stack through partner ecosystems.

In fact, Gartner predicts that by 2026, 65% of IT organizations will adopt unified data ecosystems, cutting their vendor portfolios in half while improving cost efficiency and speed. As enterprises move toward this model, one approach is emerging as a clear advantage: collaboration.

You might be wondering: What is an example of a partner ecosystem? Consider how OpenAI has built one of the most dynamic networks in the AI industry. Its collaborations span hardware, cloud, and orchestration partners that work together to expand performance, flexibility, and control across the AI technology stack. These kinds of partnerships show how enterprises can innovate faster, reduce vendor lock-in, and build AI that scales responsibly through collaboration rather than isolation.

In this article, we explore how leading companies are using AI orchestration and partner ecosystems to overcome vendor lock-in, accelerate deployment, and turn AI from isolated pilots into scalable business impact.

The Shift from Building AI Infra Alone to Building Together

As AI adoption increases, collaboration is becoming a core driver of progress. In particular, partner ecosystems that connect infrastructure, models, and data are emerging as the foundation of enterprise AI.

Here are three reasons why having an AI partner ecosystem is critical for enterprises:

- Reducing AI Risk While Accelerating Business Impact: Enterprises want AI systems that are both powerful and practical, yet many still fall short. As of 2023, only 42% of large companies had actively deployed AI, and many cited limited skills or data complexity as top barriers. Partner ecosystems help close that gap by connecting trusted infrastructure, leading models, orchestration tools, and specialized expertise. Simply put, they make AI easier to deploy and scale while reducing operational risk.

- Cutting Deployment Time and Speeding Up Innovation: Efficiency is emerging as one of the clearest advantages of AI partner ecosystems. Microsoft’s re-imagined Marketplace shows how integration can translate directly into impact, reducing the configuration time of AI apps from nearly 20 minutes to just 1 minute per instance. By unifying thousands of cloud and AI solutions under one ecosystem, organizations gain faster deployment, simplified management, and consistent performance across their AI infrastructure and enterprise AI platforms. The result is less time spent on setup and more on innovation, allowing enterprises to scale faster and realize business value sooner.

- Building Governance and Trust Into Every Layer of Enterprise AI: An EY survey reports combined losses of $4.4 billion from AI rollouts, and nearly all large companies deploying AI have faced early financial setbacks from rushed rollouts, compliance mistakes, and immature systems. Partner ecosystems help reduce these risks by embedding governance, auditability, and transparency into every layer of the AI stack. With shared oversight and built-in controls, enterprises can scale innovation while maintaining accountability and public trust.

Next, let’s walk through three case studies that show how leading companies are putting these principles into practice, demonstrating how AI partner ecosystems drive innovation, speed, and governance at scale.

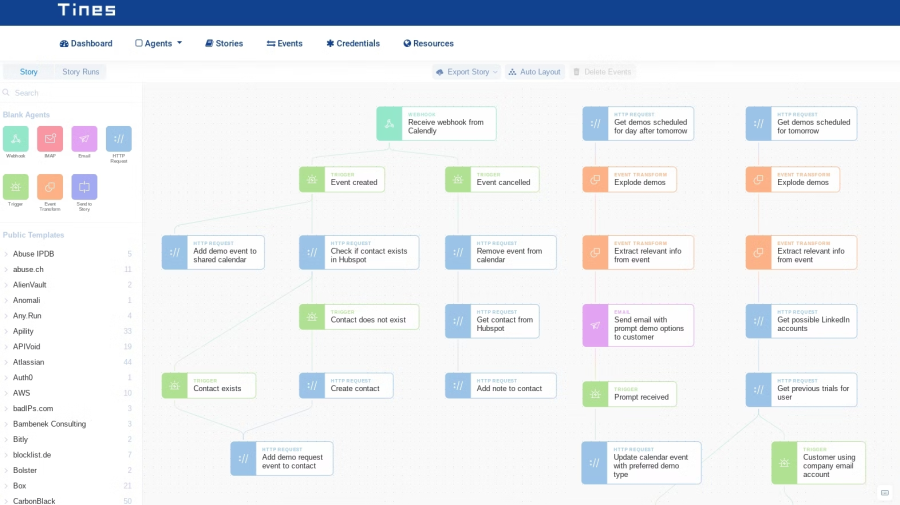

Tines, Anthropic, and AWS: A Partner Ecosystem for Scalable AI Automation

For years, risk and IT teams have faced a difficult trade-off: powerful automation tools that required deep engineering expertise, or simple platforms that couldn’t handle the complexity of advanced operations. Tines, a Europe-based workflow orchestration platform, set out to eliminate that risk-heavy compromise.

Its mission: make enterprise-grade AI automation accessible to non-technical teams without expanding the organization’s risk surface. To achieve this, Tines turned to Anthropic’s Claude, integrated through Amazon Bedrock, to power a new generation of intelligent workflow automation.

This partner ecosystem enabled Tines to embed Claude’s reasoning and code-authoring capabilities directly into its platform, while keeping data securely within AWS infrastructure. This AI orchestration between model, infrastructure, and application layers made automation not just faster but safer.

“Amazon Bedrock made integration straightforward from a technical, security, and infrastructure perspective. We love that it didn’t expand our risk envelope.”

- Stephen O’Brien, Head of Product at Tines

The impact was immediate. With Claude powering features like Automatic Transform Mode and Workbench Copilot, Tines cut 120-step workflows down to a single action. Teams now move up to 100 times faster and are 10 to 100 times more efficient. By uniting advanced AI models with a secure cloud foundation, Tines turned automation into a true force multiplier for enterprise productivity.

AI Orchestration in Action: How Syneos Health Reimagined Clinical Operations

At Syneos Health, a global biopharmaceutical services firm running more than 500 clinical trials across 9,000 sites, the challenge was speed and complexity. Each study involved massive datasets, strict regulatory documentation, and collaboration among thousands of research partners.

Traditional systems made it difficult to move quickly or maintain oversight. To scale effectively, Syneos needed a partner ecosystem that could connect data, models, and infrastructure in a coordinated, intelligent way. That ecosystem came together through Microsoft and OpenAI.

Built on Azure, Syneos combined Azure Data Lake, Databricks, and Azure OpenAI Service into a unified AI orchestration layer that streamlined analytics, forecasting, and documentation workflows. OpenAI’s models provided the reasoning and automation capabilities, while Microsoft’s cloud foundation delivered the scale, governance, and security needed for enterprise-grade operations.

Within 9 months, the company deployed a fully orchestrated AI environment that demonstrated how collaboration across infrastructure, models, and applications can accelerate progress without compromising compliance. Trial site lists that once required months of manual work are now generated from AI-driven insights within 24 to 48 hours, cutting site activation time by about 10%.

“Every day that we can accelerate development means more patients can get access to a therapy or potentially receive a lifesaving cure.”

- Michael Brooks, Chief Operating Officer, Syneos Health

This partnership is a great example of how AI orchestration multiplies value. By connecting trusted partners across the model, data, and AI infra layers, Syneos turned a fragmented workflow into a coordinated, efficient system, proving that in enterprise AI, integration is the new competitive advantage.

AI Partner Ecosystems Build Trust Across Every Layer of the Stack

Scaling enterprise AI depends not only on speed but also on trust. In Hungary, Barré Technologies saw this up close. As an enterprise software provider specializing in document and data management for regulated industries, the company faced a growing tension between automation and accountability. Clients wanted faster digital workflows, but strict compliance standards meant every step still had to be traceable, explainable, and secure.

To close that gap, Barré turned to IBM to build an AI orchestration framework centered on governance. Using IBM watsonx.ai and Cloud Pak for Data, the company integrated foundation models such as Mistral and Llama 3 into a private, on-premises environment. This setup made it possible for Barré to develop AI assistants that handle invoice processing, regulatory navigation, and document validation through natural language, boosting efficiency without sacrificing oversight.

“Our target market sectors often handle highly sensitive data, frequently under strict government regulation, and it is important for them to keep data secure. The on-premises model, enabled by the lightweight nature of the LLM, provides confidence that the Barré AI assistant offers the high-security features they need.”

- János Prikk, Business Development Leader at Barré Technologies

The results were measurable. Productivity rose by 30%, translating into roughly $90,000 in annual savings and a full return on investment within thirteen months. More importantly, Barré demonstrated that the next phase of enterprise AI isn’t just about building smarter systems; it’s about orchestrating trust at scale using partner ecosystems.

The Companies That Build Together Are Pulling Ahead in the AI Race

The three case studies we discussed make one thing clear: the future of AI is not just about building smarter models but about building partner ecosystems that support them. As AMD CEO Lisa Su said, “The future of AI is not going to be built by any one company or in a closed ecosystem. It’s going to be shaped by open collaboration across the industry.” Across sectors, the organizations moving fastest are the ones connecting partners, infrastructure, and data into unified ecosystems where speed, trust, and governance reinforce one another.

That same transformation is unfolding at the top of the AI stack. Following its partnership with AMD to diversify compute supply, OpenAI recently announced a collaboration with Broadcom to develop and deploy 10 gigawatts of custom AI accelerators. Together, these partnerships mark a shift from dependency to orchestration, embedding intelligence from silicon to software and signaling where enterprise AI is heading next: toward open, adaptive infrastructure built on collaboration rather than control.
